An article from lcgwiki.

DDM monitoring

Logbook

- On LYONDISK, files older than 24 hours are deleted (their LFC entries are not). Since datasets are destroyed at CERN 24 hours after their creation, subscriptions to T2s are made only for datasets younger than 0.8 days.
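The age cut described above can be illustrated with a minimal sketch. It is not the DDM implementation: the function names, the dictionary layout and the dataset names are hypothetical, and only the 0.8-day threshold comes from the logbook.

```python
from datetime import datetime, timedelta

MAX_AGE_DAYS = 0.8  # threshold quoted in the logbook (0.8 days = 19.2 hours)


def young_enough(created, now, max_age_days=MAX_AGE_DAYS):
    """Return True if a dataset created at `created` is younger than the threshold."""
    return now - created < timedelta(days=max_age_days)


def select_for_subscription(datasets, now):
    """Return the names of datasets young enough to be subscribed to T2s.

    `datasets` is a hypothetical mapping {dataset name: creation time}.
    """
    return [name for name, created in datasets.items() if young_enough(created, now)]


if __name__ == "__main__":
    now = datetime(2006, 10, 18, 12, 0)  # arbitrary example time
    datasets = {
        "example.dataset.A": now - timedelta(hours=10),  # kept
        "example.dataset.B": now - timedelta(hours=20),  # too old, skipped
    }
    print(select_for_subscription(datasets, now))
```

The margin between 0.8 days and the 24-hour lifetime at CERN leaves a little under 5 hours for the transfer to complete before the source is destroyed.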

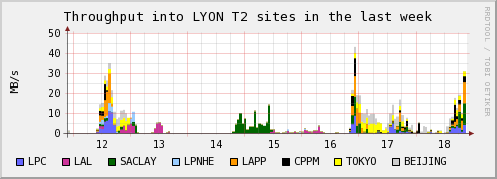

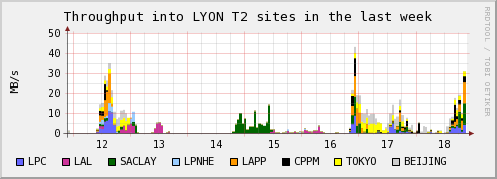

- 18 October

- End of the SC4 test. Switch to the site functional test the following Friday.

- As soon as T0-T1 transfers stopped, the T1-T2 total rate reached 60 MB/s

- 17 October

- Good transfer rate during the night and morning, but only to LYONTAPE. In the afternoon, DDM tried to transfer only to LYONDISK. The rate is much lower but there are not many errors

- New tool to request replication from T1 to T2s, using each dataset only once (sending each dataset to N sites could be made possible). But the overall rate is not that good

- According to Lionel, dcache gridftp connections need to be reinitialised from time to time (not more than once per day)

- 14 October

- Short summary of the DDM problems: the number of processes on the VObox increases continuously until the VObox hangs and needs to be rebooted.

- The problem is related to the cron managing the different DDM file transfer processes: some processes become so slow that new ones are started before the previous ones have finished, and the accumulation grows rapidly with time. Still trying to understand the causes.
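A common guard against this kind of pile-up is to have each cron-launched run take a non-blocking file lock and exit immediately if the previous run still holds it. The sketch below illustrates that general technique; it is not what the DDM software does, and the function names and lock path are hypothetical. It relies on `fcntl.flock`, so it applies to Unix-like hosts only.

```python
import fcntl
import os


def try_lock(path):
    """Try to take an exclusive, non-blocking lock on `path`.

    Returns the open file descriptor on success, or None if another
    run already holds the lock.
    """
    fd = os.open(path, os.O_CREAT | os.O_RDWR, 0o644)
    try:
        fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        os.close(fd)
        return None
    return fd


def release(fd):
    """Release the lock and close the descriptor."""
    fcntl.flock(fd, fcntl.LOCK_UN)
    os.close(fd)


def run_once(path, job):
    """Run `job` only if no other run holds the lock; return True if it ran."""
    fd = try_lock(path)
    if fd is None:
        return False  # previous run still active: skip instead of piling up
    try:
        job()
    finally:
        release(fd)
    return True


if __name__ == "__main__":
    # Hypothetical lock path and placeholder job.
    ran = run_once("/tmp/ddm_transfer_example.lock", lambda: print("transfer step"))
    print("ran" if ran else "skipped: previous run still active")
```

Skipping a run does not explain why the processes become slow, but it keeps the number of concurrent processes bounded while the cause is investigated.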

- 13 October

- Still transfers from other VOs (also at other sites). Need monitoring tools, especially for T1-T2

- Tried to transfer to LAL only. Reached 5 MB/s

- The IN2P3-GRIF channel is shared equally between 5 VOs (LHC+ops) with 3 concurrent files in FTS. The number of concurrent files was increased to 10 and the ATLAS share was increased, but simultaneous transfers to the 3 GRIF sites are not working. Need to do a test with one GRIF site only.

- Miguel Branco gives high priority to T0-T1 transfers in DDM. No T1-T2 transfers, so no test of LAL with the new FTS configuration

- SC4 will continue for one more week, followed by one week of SF tests; SC4 will then restart to reach the 800 MB/s target.
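To see why 3 concurrent files on the IN2P3-GRIF channel left so little for ATLAS, the arithmetic can be sketched as below. This assumes that concurrent-file slots are divided in proportion to each VO's share, which is a simplification and may not match the actual FTS scheduling. The function name is hypothetical, and so is the weight of 2 for the increased ATLAS share, since the logbook does not give the new value.

```python
def slots_per_vo(total_slots, shares):
    """Split `total_slots` concurrent files in proportion to each VO's share.

    Assumption: a purely proportional split; fractional results mean a VO
    does not get a full slot on average.
    """
    total_share = sum(shares.values())
    return {vo: total_slots * share / total_share for vo, share in shares.items()}


if __name__ == "__main__":
    equal = {"atlas": 1, "alice": 1, "cms": 1, "lhcb": 1, "ops": 1}
    print(slots_per_vo(3, equal)["atlas"])   # less than one slot for ATLAS
    print(slots_per_vo(10, equal)["atlas"])  # after raising concurrency to 10

    # Hypothetical increased ATLAS share (weight 2 is an illustration only).
    boosted = dict(equal, atlas=2)
    print(slots_per_vo(10, boosted)["atlas"])
```

Under this assumption, the original setting gave ATLAS less than one concurrent file on average, which would be consistent with the poor rates to GRIF noted in the entries for 3, 10 and 12 October.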

- 12 October

- Again T1-T2 transfers. Again CPPM is full and again bad transfer rate to GRIF

- According to the DDM team, FTS transfer queues between T0 and LYON are full but the rate is low (50-60 MB/s). FTS parameters were changed to cope with 3 VOs transferring to Lyon (ATLAS, ALICE and CMS). Not all of the dcache gridftp servers were used for the ATLAS pool, whereas they were all used for CMS. The CMS share was decreased to adapt to the dcache configuration in Lyon for each VO.

- 11 October : Another chaotic day

- DDM seemed to be stuck

- Mail from Ghita to the DDM expert about the Python implementation

- VOBOX in LYON rebooted

- Publication of transferred data done in short periods

- Around 22:00, DDM was running again but nothing was published

- Stop T1-T2 transfers until all SC4 files on T2s are removed

- 10 October

- Smooth transfers during the night.

- Problems with CPPM (FTS says the disk is full but the published information says otherwise)

- No efficient FTS transfer to GRIF (LAL and LPNHE)

- HPSS under maintenance in LYON (should not affect dcache if it does not last too long)

- Around 12:00, FTS server in Lyon was not able to get the status of the job. After some time, the transfers were automatically resubmitted but failed because the physical files already existed.

- 2 TB added for ATLAS in Saclay but FTS was not able to access the 1.8 TB opened to all VOs.

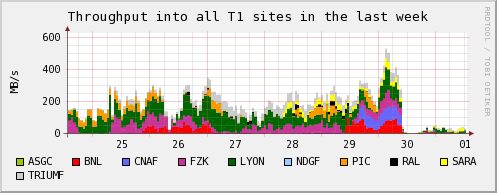

- 9 October

- No T0-T1 transfers since 8:00. Reason: "Failed to get proxy certificate from myproxy-fts.cern.ch. Reason is ERROR from server: Credentials do not exist. Unable to retrieve credential information"

- Problem with Miguel's certificate solved in the afternoon (by using David Cameron's certificate)

- Database recreated for LYON and TIER2S

- In parallel to SC4, transfer calib0_csc11 files to LYONTAPE

- All SC4 files written to LYONDISK are transferred to all TIER2S (except SACLAY, whose disk is still full). A cron will be used during the night.

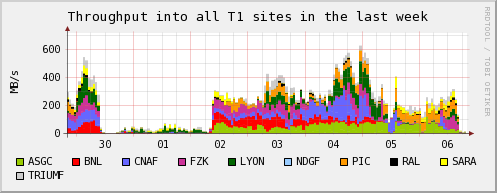

- 6-8 October :

- Requested T1-T2 transfers are not done by DDM

- Transfer CERN-LYON OK (Stable for LYONTAPE but chaotic for LYONDISK for a total rate of 50-100 MB/s) since Sunday midnight (Main failures : State from FTS: Failed; Retries: 4; Reason: Transfer failed. ERROR an end-of-file was reached )
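Failure messages of the form quoted above can be tallied by reason with a few lines of Python. This is a sketch only: the regular expression is built from the single message format shown in this logbook, the function names are hypothetical, and other FTS message formats would need their own patterns.

```python
import re
from collections import Counter

# Pattern derived from the message quoted in the logbook:
# "State from FTS: Failed; Retries: 4; Reason: Transfer failed. ERROR ..."
FAILURE_RE = re.compile(
    r"State from FTS:\s*(?P<state>\w+);\s*"
    r"Retries:\s*(?P<retries>\d+);\s*"
    r"Reason:\s*(?P<reason>.+)"
)


def parse_failure(line):
    """Return a dict with state, retries and reason, or None if the line does not match."""
    match = FAILURE_RE.search(line)
    if match is None:
        return None
    return {
        "state": match.group("state"),
        "retries": int(match.group("retries")),
        "reason": match.group("reason").strip(),
    }


def count_reasons(lines):
    """Count how often each failure reason occurs in an iterable of log lines."""
    parsed = (parse_failure(line) for line in lines)
    return Counter(p["reason"] for p in parsed if p is not None)


if __name__ == "__main__":
    sample = [
        "State from FTS: Failed; Retries: 4; Reason: Transfer failed. "
        "ERROR an end-of-file was reached",
        "some unrelated line",
    ]
    for reason, n in count_reasons(sample).items():
        print(n, reason)
```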

- 6 October :

- Problems with dcache solved

- One more week of SC4 tests

- 5 October:

- Transfer problems LYON->GRIF; investigating

- Problems with dcache in LYON

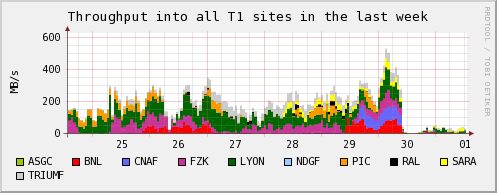

- 4 October:

- Many transfers to Lyon finish with State from FTS: Failed; Retries: 4; Reason: Transfer failed. ERROR an end-of-file was reached (Problem solved by CERN in the afternoon)

- Good efficiency for transfers CERN-LYON

- T1->T2 transfers to all sites except CPPM and SACLAY (SE full)

- 15:51: VOBOX updated to gLite 3.0.4

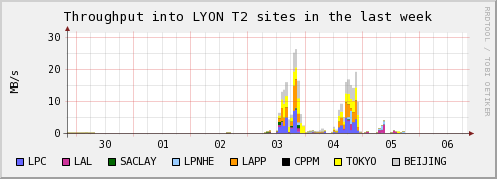

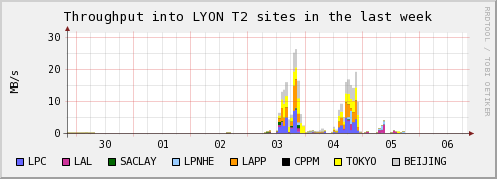

- 3 October

- dcache for SC4 tests back in operation

- Start transfer from LYONDISK to T2s (try to follow online)

- Since datasets are destroyed at CERN after 24 hours, only datasets younger than 12 hours are transferred to T2s.

- Bad transfer rate to GRIF (FTS transfers never finish for the 3 ATLAS sites)

- SE in CPPM is full

- 20-30 MB/s rate for 4 sites

- 2 October :

- Problems with dcache in Lyon

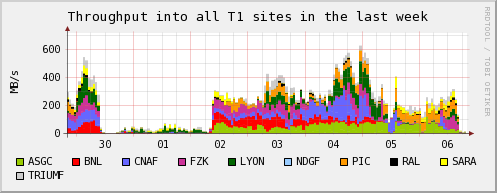

- 30 September-1 October :

- T0-T1 transfers chaotic because DDM had problems getting FTS messages (the CERN FTS server is used); the transfers are then killed.

- Status after one week :

- Transfers ran smoothly, apart from the usual problem of LFC access speed

- No T1-T2 transfers

- 24 September: Upgrade of DDM software. VOBOX activity much lower.

ATLAS Twiki main page