Atlas:SC4

Welcome to the LCG-France Atlas SC4 page

Minutes of the ATLAS SC4 meeting at CERN on 9 June (S. Jézéquel, G. Rahal) (written in French)

- T0 Role (CERN)

- Produce dummy files of 1 to 2 GB each (RAW, ESD and AOD) (see T0 Twiki)

- Initiate T0->T1 transfers

- The FTS server sends files to Lyon, choosing between the 'TAPE' (RAW, 43.2 MB/s) and 'DISK' (ESD and AOD, 23 + 20 MB/s) areas

- T1 Role (CCIN2P3)

- Get files from T0 (dedicated dCache area: L. Schwarz)

- Provide the LFC (lfc-atlas.in2p3.fr) and FTS (cclcgftsli01.in2p3.fr) services (D. Bouvet)

- Send all AODs to each T2 (20 MB/s) using the Lyon FTS server

- Regularly clean up files

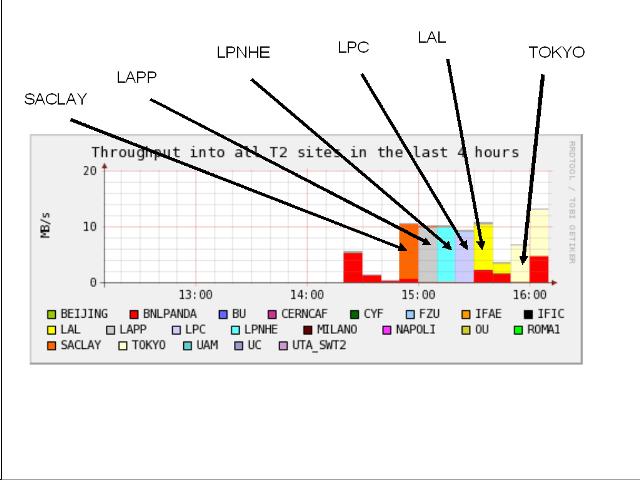

- T2 Role (BEIJING, LAL, LAPP, LPC, LPNHE, SACLAY, TOKYO)

- Get files from T1 (Lyon). Files on the T2 are written in /home/atlas/sc4tier0/...

- Clean up the files (?)

- Other roles

- ATLAS (S. Jézéquel) : Initiate T1->T2 transfers
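The nominal rates listed in the roles above can be turned into rough daily volumes. The following is a minimal sketch, not an official ATLAS estimate. It assumes that the 20 MB/s AOD figure applies to each T2 separately, that rates are sustained over 24 hours, and that 1 TB = 10^6 MB. The variable and function names are illustrative only.

```python
# Nominal T0 -> Lyon rates quoted above, in MB/s.
T0_TO_LYON_MB_S = {"RAW": 43.2, "ESD": 23.0, "AOD": 20.0}

# T2 sites associated with Lyon, as listed above.
T2_SITES = ["BEIJING", "LAL", "LAPP", "LPC", "LPNHE", "SACLAY", "TOKYO"]

# Assumption: the 20 MB/s AOD rate is per T2, not shared among them.
AOD_PER_T2_MB_S = 20.0

SECONDS_PER_DAY = 86_400


def tb_per_day(rate_mb_s: float) -> float:
    """Convert a sustained rate in MB/s to TB/day (1 TB = 10**6 MB)."""
    return rate_mb_s * SECONDS_PER_DAY / 1e6


# RAW goes to the 'TAPE' area, ESD and AOD go to the 'DISK' area.
tape_rate = T0_TO_LYON_MB_S["RAW"]
disk_rate = T0_TO_LYON_MB_S["ESD"] + T0_TO_LYON_MB_S["AOD"]
inbound_rate = tape_rate + disk_rate
outbound_rate = AOD_PER_T2_MB_S * len(T2_SITES)

print(f"T0 -> Lyon TAPE: {tape_rate:6.1f} MB/s = {tb_per_day(tape_rate):5.2f} TB/day")
print(f"T0 -> Lyon DISK: {disk_rate:6.1f} MB/s = {tb_per_day(disk_rate):5.2f} TB/day")
print(f"T0 -> Lyon all : {inbound_rate:6.1f} MB/s = {tb_per_day(inbound_rate):5.2f} TB/day")
print(f"Lyon -> all T2s: {outbound_rate:6.1f} MB/s = {tb_per_day(outbound_rate):5.2f} TB/day")
```

Under these assumptions, Lyon receives 86.2 MB/s in total (about 7.4 TB/day) and, if all seven T2s were served at 20 MB/s simultaneously, would send 140 MB/s.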

Information from DDM monitoring

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4h.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4ht2.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackday.png

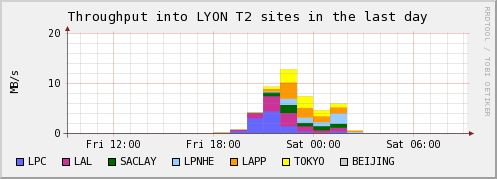

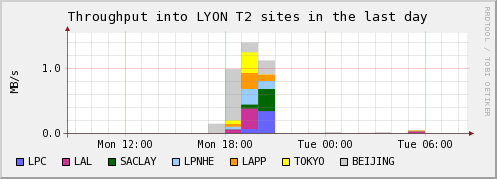

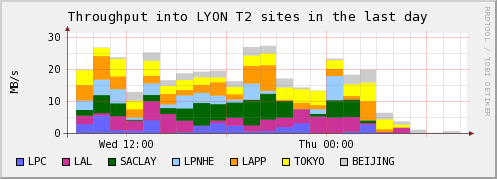

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayLYON.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayt2.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweek.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekLYON.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekt2.png

- Transfer rates for LYON and associated T2s (files validated by DDM):

Information from FTS monitoring

- T1->T2 :

- 15 concurrent files and 10 streams for LYON-TOKYO

- 5 concurrent files and 5 streams for LYON-BEIJING (SE not powerful enough for 15/15)

- 10 concurrent files and 10 streams for LYON-French T2s (LAL, LPNHE, LPC, SACLAY) except for LAPP (5 concurrent files and 1 stream)
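The channel settings above can be written down as a small table to see the aggregate load they allow. This is a sketch only: it assumes that "streams" means parallel streams per file transfer, so that a channel can open at most files × streams streams. The per-site channel names for the French T2s (for example `LYON-LAL`) are illustrative, and `ChannelSettings` is a hypothetical helper, not part of FTS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelSettings:
    """Hypothetical container for the two per-channel settings listed above."""
    concurrent_files: int
    streams_per_file: int  # assumption: streams are counted per file transfer

    @property
    def max_streams(self) -> int:
        # Upper bound on simultaneous streams when the channel is full.
        return self.concurrent_files * self.streams_per_file


CHANNELS = {
    "LYON-TOKYO": ChannelSettings(15, 10),
    "LYON-BEIJING": ChannelSettings(5, 5),
    "LYON-LAL": ChannelSettings(10, 10),
    "LYON-LPNHE": ChannelSettings(10, 10),
    "LYON-LPC": ChannelSettings(10, 10),
    "LYON-SACLAY": ChannelSettings(10, 10),
    "LYON-LAPP": ChannelSettings(5, 1),
}

total_files = sum(c.concurrent_files for c in CHANNELS.values())
total_streams = sum(c.max_streams for c in CHANNELS.values())

for name, settings in CHANNELS.items():
    print(f"{name:13s} files={settings.concurrent_files:2d} "
          f"streams/file={settings.streams_per_file:2d} "
          f"max streams={settings.max_streams:3d}")
print(f"All T1->T2 channels full: {total_files} files, {total_streams} streams")
```

With every channel saturated, these settings allow up to 65 concurrent file transfers out of Lyon.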

Information from dCache monitoring (provided by Lyon)

- Monitoring of dCache for SC4 areas

- LYONDISK : maximum of 25 concurrent gridftp accesses

- LYONTAPE : maximum of 10 concurrent gridftp accesses

VOBOX Configuration

- 4 processors at 3 GHz

- 4 GB of memory (2 GB dedicated to swap)

- Daily, weekly and monthly monitoring of the VOBOX can be found here

Disk space availability

Daily news

- 20 June 2006: Mail from Miguel Branco (DDM responsible)

Today we started deploying DQ2 on the remaining T1 sites (not all sites still available).

Attached is the result of a (nice) ramp up, easily beating SC3's record (on the 1st day of export of SC4) peaking at ~ 270 MB/s. Each 'step' in the graph is an additional T1 being added to the export.

Dataset subscriptions are now slowing down and will resume tomorrow.

Our DQ2 monitoring has been turned off and we expect to have it back tomorrow! Still a long way to go until we have a reasonable understanding of the limiting factors..

- 22 June : General power cut at CERN at 2 pm.

- 24 June : Dataset T0.D.run000949.ESD transferred from Lyon to LAL and TOKYO. Transferring the same dataset to LAPP and LPC failed because these sites have the same domain name (*.in2p3.fr) as Lyon.

- 25 June : Almost no transfers from CERN to T1s during the weekend.

- 26 June : SC4 transfers restarted with working DDM monitoring. Successful transfers to LAL, SACLAY and TOKYO. Technical problem (domain name) for LAPP, LPNHE and LPC : under investigation. Contact BEIJING.

- 28 June :

- Domain name problem solved for LAPP, LPC and LPNHE. First transfers to these sites have been done.

- Increase the number of LFC connections to 40 (advice from CERN-IT and DDM).

- AODs were transferred to all T2s associated with Lyon except BEIJING (looks like an FTS problem)

- Transfer all AODs to LAL. Problems transferring AODs to LAPP (one dCache server crashed)

- 29 June :

- Transfer of AODs to TOKYO

- 1 July :

- Test transfers from LYONDISK to T2s

- 5 July :

- First DDM transfer to BEIJING