Difference between revisions of "Atlas:SC4"

| (24 intermediate revisions by 9 users not shown) | |||

| Line 17: | Line 17: | ||

** Clean-up the files (?) | ** Clean-up the files (?) | ||

* Other roles | * Other roles | ||

| − | ** ATLAS (S. Jézéquel) : Initiate T1->T2 transfers | + | ** ATLAS (S. Jézéquel, G. Rahal) : Initiate T1->T2 transfers |

== Information from DDM monitoring == | == Information from DDM monitoring == | ||

* [http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/ Main DDM monitoring page] | * [http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/ Main DDM monitoring page] | ||

| − | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4h.png | + | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4h.png http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4ht2.png |

| − | |||

---- | ---- | ||

* http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackday.png | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackday.png | ||

| + | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayLYON.png | ||

* http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayt2.png | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayt2.png | ||

| Line 33: | Line 33: | ||

* http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweek.png | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweek.png | ||

| + | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekLYON.png | ||

* http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekt2.png | * http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekt2.png | ||

| Line 48: | Line 49: | ||

* [http://atldq02.cern.ch:8000/dq2/site_monitor/sites Detailed information on each site ] | * [http://atldq02.cern.ch:8000/dq2/site_monitor/sites Detailed information on each site ] | ||

| + | |||

| + | * [http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/sitestates.php?site=LYON File transfer state in LYON ] | ||

== Information from FTS monitoring == | == Information from FTS monitoring == | ||

| Line 58: | Line 61: | ||

** 15 concurrent files and 10 streams for LYON-TOKYO | ** 15 concurrent files and 10 streams for LYON-TOKYO | ||

** 5 concurrent files and 5 streams for LYON-BEIJING (SE not enough powerful for 15/15 ) | ** 5 concurrent files and 5 streams for LYON-BEIJING (SE not enough powerful for 15/15 ) | ||

| − | ** 10 concurrent files and 10 streams for LYON-French T2s (LAL | + | ** 10 concurrent files and 10 streams for LYON-French T2s (LAL, LPNHE, LPC, SACLAY) except for LAPP (5 concurrent files and 1 stream) |

== Information from dCache monitoring (provided by Lyon) == | == Information from dCache monitoring (provided by Lyon) == | ||

*[http://cctools.in2p3.fr/dcache/transfers/atlas-sc4.html Monitoring of dcache for SC4 areas] | *[http://cctools.in2p3.fr/dcache/transfers/atlas-sc4.html Monitoring of dcache for SC4 areas] | ||

| − | * LYONDISK : 25 concurrent access maximum | + | * LYONDISK : 25 gridftp concurrent access maximum |

| − | * LYONTAPE : 10 concurrent access maximum | + | * LYONTAPE : 10 gridft concurrent access maximum |

== VOBOX Configuration == | == VOBOX Configuration == | ||

| Line 70: | Line 73: | ||

* 4 processors 3 GHz | * 4 processors 3 GHz | ||

* 4 GB of memory ( 2 GB dedicated to SWAP) | * 4 GB of memory ( 2 GB dedicated to SWAP) | ||

| + | * The monitoring of the Vobox daily, weekly and monthly can be found [http://atlas-france.in2p3.fr/Activites/Informatique/OutilsCC/VO-cclcgatlas Here] | ||

== Disk space availability == | == Disk space availability == | ||

| + | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=BEIJING-LCG2&visibility=SE BEIJING] | ||

| + | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=CPPM-LCG2&visibility=SE CPPM] | ||

* [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=GRIF&visibility=SE GRIF] | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=GRIF&visibility=SE GRIF] | ||

* [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=IN2P3-LPC&visibility=SE LPC] | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=IN2P3-LPC&visibility=SE LPC] | ||

* [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=IN2P3-LAPP&visibility=SE LAPP] | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=IN2P3-LAPP&visibility=SE LAPP] | ||

* [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=TOKYO-LCG2&visibility=SE TOKYO] | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=TOKYO-LCG2&visibility=SE TOKYO] | ||

| + | * [http://gridice2.cnaf.infn.it:50080/gridice/site/site_details.php?siteName=IN2P3-CC&visibility=SE LYON] | ||

== Daily news == | == Daily news == | ||

| Line 82: | Line 89: | ||

* [https://uimon.cern.ch/twiki/bin/view/Atlas/DDMSc4#Daily_log SC4 Data Managment Daily log] | * [https://uimon.cern.ch/twiki/bin/view/Atlas/DDMSc4#Daily_log SC4 Data Managment Daily log] | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

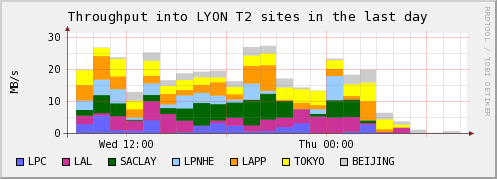

| − | * | + | * SC4 transfers from LYON to T2s: |

| − | ** | + | ** Simultaneous transfers to the 7 T2 sites for more than 24 hours; it reached more than 25MB/s |

| − | [[Image: | + | **[[Image:StackdayLYON-27-7.png]] |

| + | == Post-mortem DDM meeting == | ||

| + | * [http://indico.cern.ch/conferenceDisplay.py?confId=4959 Presentation at CERN (M. Branco) showing the results of the SC4 tests.] (1 August 2006) | ||

| − | + | == [[Atlas | ATLAS Twiki main page]] == | |

| − | |||

Latest revision as of 15:20, 1 October 2006

Contents

- 1 Welcome to the LCG-France Atlas SC4 page

- 2 Information from DDM monitoring

- 3 Information from FTS monitoring

- 4 Information from dCache monitoring (provided by Lyon)

- 5 VOBOX Configuration

- 6 Disk space availability

- 7 Daily news

- 8 Post-mortem DDM meeting

- 9 ATLAS Twiki main page

Welcome to the LCG-France Atlas SC4 page

Minutes of the ATLAS SC4 meeting at CERN on 9 June (S. Jézéquel, G. Rahal) (written in French)

- T0 Role (CERN)

- Produce dummy files of 1 to 2 GB each (RAW, ESD and AOD) (see T0 Twiki)

- Initiate T0->T1 transfers

- FTS server sends files to Lyon, choosing between the 'TAPE' (RAW, 43.2 MB/s) and 'DISK' (ESD and AOD, 23+20 MB/s) areas

- T1 Role (CCIN2P3)

- Get files from T0 (dedicated dCache area: L. Schwarz)

- Provide LFC (lfc-atlas.in2p3.fr) and FTS service (cclcgftsli01.in2p3.fr) (D. Bouvet)

- Send all AODs to each T2 (20 MB/s) using Lyon FTS server

- Regularly clean up files

- T2 Role (BEIJING, LAL, LAPP, LPC, LPNHE, SACLAY, TOKYO)

- Get files from T1 (Lyon). Files on the T2 are written in /home/atlas/sc4tier0/...

- Clean up the files (?)

- Other roles

- ATLAS (S. Jézéquel, G. Rahal) : Initiate T1->T2 transfers
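
The nominal rates listed above can be turned into aggregate figures with a little arithmetic. The sketch below is only an illustration: it assumes that "23+20" means 23 MB/s of ESD plus 20 MB/s of AOD, that the 20 MB/s AOD rate applies to each of the seven T2s separately, and that 1 TB = 1,000,000 MB. All variable and function names are hypothetical.

```python
# Nominal SC4 rates quoted in the meeting notes, in MB/s.
# Assumption: "23+20" is read as ESD = 23 MB/s and AOD = 20 MB/s.
T0_TO_T1_RATES = {"RAW": 43.2, "ESD": 23.0, "AOD": 20.0}

# T2 sites attached to Lyon, as listed in the notes.
T2_SITES = ["BEIJING", "LAL", "LAPP", "LPC", "LPNHE", "SACLAY", "TOKYO"]

# Assumption: the 20 MB/s AOD rate is per T2, not shared between them.
AOD_RATE_PER_T2 = 20.0

SECONDS_PER_DAY = 86_400


def daily_volume_tb(rate_mb_s: float) -> float:
    """Volume moved in one day at a sustained rate, in decimal TB."""
    return rate_mb_s * SECONDS_PER_DAY / 1_000_000


# T0 -> T1: RAW goes to the TAPE area, ESD and AOD go to the DISK area.
tape_rate = T0_TO_T1_RATES["RAW"]
disk_rate = T0_TO_T1_RATES["ESD"] + T0_TO_T1_RATES["AOD"]
inbound_rate = tape_rate + disk_rate

# T1 -> T2: every T2 receives the full AOD stream.
outbound_rate = AOD_RATE_PER_T2 * len(T2_SITES)

print(f"T0->T1 nominal rate: {inbound_rate:.1f} MB/s")
print(f"T1->T2 nominal rate: {outbound_rate:.1f} MB/s")
print(f"T0->T1 daily volume: {daily_volume_tb(inbound_rate):.2f} TB")
```

These are target figures derived from the notes, not measured throughput.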

Information from DDM monitoring

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4h.png http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stack4ht2.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackday.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayLYON.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackdayt2.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweek.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekLYON.png

- http://atlas-ddm-monitoring.web.cern.ch/atlas-ddm-monitoring/rrd/plots/stackweekt2.png

--

- Transfer rates for LYON and associated T2s (files validated by DDM):

Information from FTS monitoring

- T1->T2 :

- 15 concurrent files and 10 streams for LYON-TOKYO

- 5 concurrent files and 5 streams for LYON-BEIJING (SE not powerful enough for 15/15)

- 10 concurrent files and 10 streams for LYON-French T2s (LAL, LPNHE, LPC, SACLAY) except for LAPP (5 concurrent files and 1 stream)
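
For scripting or quick comparison, the per-channel settings above can be written down as a small lookup table. This is a minimal sketch, not the FTS configuration format: the `ChannelSettings` class and the helper functions are hypothetical, and the "maximum streams" figure simply assumes that each concurrent file can open the full number of streams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelSettings:
    """Settings of one LYON -> T2 channel (hypothetical container)."""
    concurrent_files: int
    streams: int  # streams per file


# Values copied from the list above; keys are the destination T2s.
FRENCH_T2_DEFAULT = ChannelSettings(concurrent_files=10, streams=10)
CHANNELS = {
    "TOKYO": ChannelSettings(concurrent_files=15, streams=10),
    "BEIJING": ChannelSettings(concurrent_files=5, streams=5),
    "LAL": FRENCH_T2_DEFAULT,
    "LPNHE": FRENCH_T2_DEFAULT,
    "LPC": FRENCH_T2_DEFAULT,
    "SACLAY": FRENCH_T2_DEFAULT,
    "LAPP": ChannelSettings(concurrent_files=5, streams=1),
}


def settings_for(site: str) -> ChannelSettings:
    """Return the settings of the LYON-<site> channel."""
    return CHANNELS[site.upper()]


def max_streams(site: str) -> int:
    """Upper bound on simultaneous streams on one channel.

    Assumption: every concurrent file uses all of its streams at once.
    """
    s = settings_for(site)
    return s.concurrent_files * s.streams


def total_concurrent_files() -> int:
    """Concurrent files summed over all LYON -> T2 channels."""
    return sum(s.concurrent_files for s in CHANNELS.values())


for site in sorted(CHANNELS):
    print(f"LYON-{site}: {settings_for(site)} -> up to {max_streams(site)} streams")
```

Keeping the French T2 default in one constant makes the LAPP exception easy to spot.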

Information from dCache monitoring (provided by Lyon)

- Monitoring of dCache for SC4 areas

- LYONDISK : maximum of 25 concurrent gridftp accesses

- LYONTAPE : maximum of 10 concurrent gridftp accesses

VOBOX Configuration

- 4 processors 3 GHz

- 4 GB of memory ( 2 GB dedicated to SWAP)

- Daily, weekly and monthly monitoring of the VOBOX can be found here: http://atlas-france.in2p3.fr/Activites/Informatique/OutilsCC/VO-cclcgatlas

Disk space availability

Daily news

- SC4 transfers from LYON to T2s: