Atlas:Analysis HC beyond STEP09

(→Recent talks & Documents) |

(→Recent talks & Documents) |

||

| Ligne 110: | Ligne 110: | ||

== Recent talks & Documents == | == Recent talks & Documents == | ||

| − | * [http://lcg.in2p3.fr/wiki/index.php/Image:0910-ELancon-frontier-test.pdf Some conclusions about DB access HC tests (663 and 720)] | + | * [http://lcg.in2p3.fr/wiki/index.php/Image:0910-ELancon-frontier-test.pdf Some conclusions about DB access HC tests (663 and 720)] by Eric Lançon (28/10/09) |

* [http://indico.in2p3.fr/getFile.py/access?contribId=6&sessionId=30&resId=0&materialId=slides&confId=2110 ATLAS : from STEP09 towards first beams] Graeme Stewart's talk@Journées Grille France (16 October 2009) | * [http://indico.in2p3.fr/getFile.py/access?contribId=6&sessionId=30&resId=0&materialId=slides&confId=2110 ATLAS : from STEP09 towards first beams] Graeme Stewart's talk@Journées Grille France (16 October 2009) | ||

* [http://indico.cern.ch/getFile.py/access?contribId=8&sessionId=2&resId=0&materialId=slides&confId=66012 Summary of HammerCloud Tests since STEP09] Dan van der Ster's talk@ATLAS Jamboree T1/T2/T3 (13 October 2009) | * [http://indico.cern.ch/getFile.py/access?contribId=8&sessionId=2&resId=0&materialId=slides&confId=66012 Summary of HammerCloud Tests since STEP09] Dan van der Ster's talk@ATLAS Jamboree T1/T2/T3 (13 October 2009) | ||

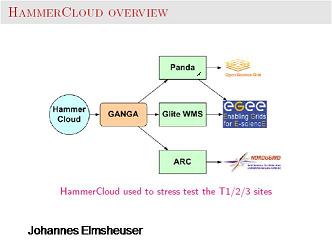

* [http://indico.cern.ch/getFile.py/access?contribId=31&sessionId=16&resId=0&materialId=slides&confId=50976 HammerCloud Plans] Johannes Elmsheuser's talk@ATLAS S&C Week (2 September 2009) | * [http://indico.cern.ch/getFile.py/access?contribId=31&sessionId=16&resId=0&materialId=slides&confId=50976 HammerCloud Plans] Johannes Elmsheuser's talk@ATLAS S&C Week (2 September 2009) | ||

Revision as of 10:25, 10 November 2009

--Chollet 15:56, 19 October 2009 (CEST)

Distributed Analysis Stress Tests - HammerCloud beyond STEP09

Lessons learnt from STEP09

- Sites may identify the amount of analysis they can reasonably sustain and set hard limits on the number of running analysis jobs.

- Balancing data across many disk servers is essential.

- Analysis requires very high I/O (5 MB/s per job). Sites should review their LAN architecture to avoid bottlenecks.
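To give a feel for the LAN load implied by the 5 MB/s per job figure, the aggregate read rate can be estimated by multiplying it by the number of concurrent analysis jobs. The sketch below is a back-of-the-envelope calculation only: it assumes every job reads at the quoted rate at the same time, and the job counts are hypothetical examples, not measured site values.

```python
# Per-job I/O rate quoted in the STEP09 lessons (MB/s).
IO_PER_JOB_MB_S = 5.0


def aggregate_io_mb_s(running_jobs, io_per_job=IO_PER_JOB_MB_S):
    """Total read rate (MB/s) if all jobs read at the per-job rate."""
    return running_jobs * io_per_job


def to_gbit_s(mb_per_s):
    """Convert MB/s to Gbit/s (1 MB = 8 Mbit, 1 Gbit = 1000 Mbit)."""
    return mb_per_s * 8 / 1000


# Hypothetical job counts, for illustration only.
for jobs in (100, 200, 500):
    total = aggregate_io_mb_s(jobs)
    print(f"{jobs} jobs -> {total:.0f} MB/s ({to_gbit_s(total):.1f} Gbit/s)")
```

A site can compare such an estimate with the capacity of its disk servers and network uplinks when choosing its hard limit on running analysis jobs.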

Results

- ATLAS HC Tests results : http://gangarobot.cern.ch/hc/ (New web interface!)

- ATLAS STEP09 summary : http://gangarobot.cern.ch/st/step09summary.html

ATLAS Info & Contacts

- Information via mailing list ATLAS-LCG-OP-L@in2p3.fr

- LPC : Nabil Ghodbane - Nabil.Ghodbane@cern.ch

- LAL : Nicolas Makovec

- LAPP : Stéphane Jézéquel

- CPPM : Emmanuel Le Guirriec

- LPSC : Sabine Crepe

- LPNHE : Tristan Beau

- CC-T2 : Catherine, Ghita

- IRFU : Nathalie Besson

HC Tests

Objectives

- Improve Cloud readiness by following site and ATLAS problems week by week (SL5 migration, site upgrades)

- Identify best data access method per site by comparing the event rate and CPU/Walltime

https://twiki.cern.ch/twiki/bin/view/Atla/HammerCloudDataAccess#FR_cloud

- Exercise Analysis with Conditions DB access (see where squid caching is needed) and Tag analysis
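The second objective, choosing a data access method per site from the event rate and CPU/Walltime, can be sketched as follows. This is only an illustration: the `Measurement` class, the site names and all numbers are hypothetical, and ranking by event rate first with CPU/Walltime as a tie-breaker is an assumption, not the criterion used by HammerCloud.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    site: str             # site name (placeholder values below)
    method: str           # e.g. "DQ2_LOCAL" or "FILE_STAGER"
    event_rate_hz: float  # events processed per second
    cpu_time_s: float
    wall_time_s: float

    @property
    def cpu_efficiency(self) -> float:
        """CPU/Walltime ratio of the job."""
        return self.cpu_time_s / self.wall_time_s


def _score(m):
    # Assumed ranking: event rate first, CPU/Walltime as tie-breaker.
    return (m.event_rate_hz, m.cpu_efficiency)


def best_method_per_site(measurements):
    """Return {site: method} keeping the best-scoring method per site."""
    best = {}
    for m in measurements:
        current = best.get(m.site)
        if current is None or _score(m) > _score(current):
            best[m.site] = m
    return {site: m.method for site, m in best.items()}


# Made-up numbers, for illustration only.
sample = [
    Measurement("SITE_A", "DQ2_LOCAL", 10.0, 50.0, 100.0),
    Measurement("SITE_A", "FILE_STAGER", 12.0, 80.0, 100.0),
    Measurement("SITE_B", "DQ2_LOCAL", 9.0, 90.0, 100.0),
    Measurement("SITE_B", "FILE_STAGER", 9.0, 70.0, 100.0),
]
print(best_method_per_site(sample))
```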

Data Access methods

Multiple data access methods are exercised:

- via Panda : a copy-to-WN access mode using rfcp (xrootd in ANALY-LYON)

- via gLite WMS : 2 data access modes available

- DQ2_LOCAL mode is a direct access mode using rfio or dcap

- FILE_STAGER mode : data are staged in by a dedicated thread running in parallel with Athena
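The idea behind FILE_STAGER mode, staging input files in a background thread while the analysis processes files that are already local, can be illustrated with a generic producer/consumer sketch. This is not the actual FileStager or Athena code: `stage_in`, `run_analysis`, the `/tmp` path and the buffer size are hypothetical names and values chosen for illustration.

```python
import queue
import threading


def stage_in(remote_name):
    """Placeholder for the real copy to local disk (hypothetical)."""
    return f"/tmp/{remote_name}"


def stager(remote_files, staged, stage=stage_in):
    """Background thread: stage files one by one into a bounded buffer."""
    for name in remote_files:
        staged.put(stage(name))  # blocks while the buffer is full
    staged.put(None)             # sentinel: no more files


def run_analysis(remote_files, process, buffer_size=2):
    """Process files as they become available, while staging continues."""
    staged = queue.Queue(maxsize=buffer_size)
    worker = threading.Thread(
        target=stager, args=(remote_files, staged), daemon=True
    )
    worker.start()
    results = []
    while True:
        local_path = staged.get()
        if local_path is None:
            break
        results.append(process(local_path))
    worker.join()
    return results


# The lambda stands in for the per-file analysis step.
print(run_analysis(["file1.root", "file2.root"], lambda path: f"processed {path}"))
```

The bounded queue keeps the stager only a few files ahead of the analysis, which limits the scratch space needed on the worker node.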

Week 40

29/09/09 Test 649

- Muon Analysis (Release 15.3.1)

- Input DS (STEP09) : mc08.*merge.AOD.e*_s*_r6*tid*

- via Panda (mode copy-to-WN using ddcp/rfcp - xrootd in ANALY-LYON)

- http://gangarobot.cern.ch/hc/649/test/

Bad efficiency: all sites affected. Jobs failed with exit code 1137 (Put error: error in copying the file from the job workdir to the local SE) due to an LFC ACL problem concerning write permissions in /grid/atlas/users/pathena for pilot jobs with /atlas/Role=pilot and /atlas/fr/Role=pilot (newly activated).
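The input dataset pattern used in this test, mc08.*merge.AOD.e*_s*_r6*tid*, can be checked against candidate dataset names with Python's standard `fnmatch` module. The sketch assumes the `*` characters are shell-style wildcards; the dataset names in the example are invented for illustration and are not taken from the test.

```python
from fnmatch import fnmatchcase

# Pattern quoted for the STEP09 input datasets.
STEP09_PATTERN = "mc08.*merge.AOD.e*_s*_r6*tid*"


def matches_step09(dataset_name, pattern=STEP09_PATTERN):
    """Case-sensitive shell-style match of a dataset name."""
    return fnmatchcase(dataset_name, pattern)


# Hypothetical dataset names, for illustration only.
candidates = [
    "mc08.000001.sample.merge.AOD.e1_s2_r600_tid000001",
    "mc08.000001.sample.recon.AOD.e1_s2_r500_tid000001",
]
for name in candidates:
    print(name, matches_step09(name))
```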

30/09/09 Tests 652, 653, 656, 657

- Muon Analysis (Release 15.3.1)

- Input DS (STEP09) : mc08.*merge.AOD.e*_s*_r6*tid*

- via WMS

- DQ2_LOCAL mode or direct access dcap/rfio : http://gangarobot.cern.ch/hc/652/test/

- DQ2_LOCAL mode or direct access dcap/rfio : http://gangarobot.cern.ch/hc/656/test/

- FILE_STAGER mode : http://gangarobot.cern.ch/hc/653/test/

- FILE_STAGER mode : http://gangarobot.cern.ch/hc/657/test/

http://lcg.in2p3.fr/wiki/images/ATLAS-HC300909.gif

Week 41

08/10/09 Test 663

- Cosmics DPD Analysis (Release 15.5.0)

- Input DS - DATADISK : data09_cos.*.DPD*

- Cond DB access to Oracle in Lyon T1

- via Panda (mode copy-to-WN using ddcp/rfcp - xrootd in ANALY-LYON)

- http://gangarobot.cern.ch/hc/663/test/

- Site problems or downtime :

- LAL : downtime

- RO : DS unavailable

- LYON (T2) : release 15.5.0 unavailable. No performance comparison possible with other T2s

- TOKYO, BEIJING : poor performance for foreign sites compared to French sites. Squid installation in progress. Tests to be performed afterwards

- IRFU, LAPP, LPNHE : a two-peak structure is observed in the Nb events/Athena(s) distribution

http://lcg.in2p3.fr/wiki/images/HC663-081009-GRIF-Irfu-CPU.png

http://lcg.in2p3.fr/wiki/images/HC663-081009-GRIF-Irfu-rate.png

http://lcg.in2p3.fr/wiki/images/HC663-081009-GRIF-Irfu-EventsAthena.png

http://lcg.in2p3.fr/wiki/images/HC663-081009-Tokyo-CPU.png

http://lcg.in2p3.fr/wiki/images/HC663-081009-Tokyo-rate.png

http://lcg.in2p3.fr/wiki/images/HC663-081009-Tokyo-EventsAthena.png

Week 42

15/10/09 Test 682

- Muon Analysis (Release 15.3.1)

- Input DS (STEP09) : mc08.*merge.AOD.e*_s*_r6*tid*

- via Panda (mode copy-to-WN using ddcp/rfcp - xrootd in ANALY-LYON)

- http://gangarobot.cern.ch/hc/682/test/

- Site problems or results to be followed up :

- GRIF-IRFU : failures due to release 15.3.1 installation (ran fine last week but needed to be patched)

gcc version 4.1.2 used instead of gcc 3.4... See GGUS ticket 52483

- LYON (T2) : both queues ANALY_LYON (xrootd) and ANALY_LYON_DCACHE exercised at the same time

- Limitation due to BQS resource (u_xrootd_lhc)

- ANALY_LYON_DCACHE : limited number of jobs but good performance (effect of dcache upgrade ?)

- ANALY_LYON : many failures - problems followed by J.Y Nief (root version used by ATLAS ?)

Week 43

21/10/09 Test 720

- Cosmics DPD Analysis (Release 15.5.0)

- Input DS - DATADISK : data09_cos.*.DPD*

- Test dedicated to Tokyo and Beijing, with access via Squid

- via Panda (mode copy-to-WN using ddcp/rfcp)

- http://gangarobot.cern.ch/hc/720/test/

http://lcg.in2p3.fr/wiki/images/HC720-211009-Tokyo-CPU.png

http://lcg.in2p3.fr/wiki/images/HC720-211009-Tokyo-rate.png

http://lcg.in2p3.fr/wiki/images/HC720-211009-Tokyo-EventsAthena.png

Recent talks & Documents

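- Some conclusions about DB access HC tests (663 and 720) by Eric Lançon (28/10/09) : http://lcg.in2p3.fr/wiki/index.php/Image:0910-ELancon-frontier-test.pdf

- ATLAS : from STEP09 towards first beams, Graeme Stewart's talk@Journées Grille France (16 October 2009) : http://indico.in2p3.fr/getFile.py/access?contribId=6&sessionId=30&resId=0&materialId=slides&confId=2110

- Summary of HammerCloud Tests since STEP09, Dan van der Ster's talk@ATLAS Jamboree T1/T2/T3 (13 October 2009) : http://indico.cern.ch/getFile.py/access?contribId=8&sessionId=2&resId=0&materialId=slides&confId=66012

- HammerCloud Plans, Johannes Elmsheuser's talk@ATLAS S&C Week (2 September 2009) : http://indico.cern.ch/getFile.py/access?contribId=31&sessionId=16&resId=0&materialId=slides&confId=50976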