Difference between revisions of "Atlas:StorageRequirements"

(→Summary table for Space token and Name space) |

(→ATLASDATADISK) |

||

| (130 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

| + | Sources of information: | ||

| + | * ATLAS has now prepared a page: https://twiki.cern.ch/twiki/bin/view/Atlas/StorageSetUp | ||

| + | * https://twiki.cern.ch/twiki/bin/view/Atlas/Step09 | ||

| + | * Presentations at [http://indico.cern.ch/conferenceDisplay.py?confId=45475&view=standard&showDate=all&showSession=all&detailLevel=contribution GDB (13 May 2009)] and [http://indico.in2p3.fr/contributionDisplay.py?contribId=0&sessionId=3&confId=1660 Réunion LCG-France (18-19 May 2009)] | ||

| + | * Presentations at ATLAS Tier-1/2/3 Jamboree (28 August 2008) [http://indico.cern.ch/conferenceDisplay.py?confId=38738] | ||

| + | |||

| + | |||

| + | Some comments about the contents | ||

| + | |||

* Information only for T2s (and T3s). | * Information only for T2s (and T3s). | ||

* ACL information only for DPM for the moment. | * ACL information only for DPM for the moment. | ||

| − | * | + | * See the slides |

| − | ** | + | ** LCG-France T2-T3 Technical meeting (20 June 2008) [http://indico.in2p3.fr/materialDisplay.py?contribId=4&materialId=slides&confId=808] |

| + | ** LCG-France T2-T3 Technical meeting (18 July 2008) [http://indico.in2p3.fr/conferenceDisplay.py?confId=809] | ||

* The requirement for ATLASGROUPDISK is temporary, due to a technical limitation in DPM. ATLAS has contacted the DPM developers. The proper implementation is expected to be available in September. | * The requirement for ATLASGROUPDISK is temporary, due to a technical limitation in DPM. ATLAS has contacted the DPM developers. The proper implementation is expected to be available in September. | ||

| − | |||

* Site admins are requested to create at least the space tokens. The namespace directories can be left to ATLAS production, provided the top directory is set up so that <code>/atlas/Role=production</code> is allowed to execute dpns-mkdir and dpns-setacl remotely. (Site admins are of course welcome to create the directories themselves.) | * Site admins are requested to create at least the space tokens. The namespace directories can be left to ATLAS production, provided the top directory is set up so that <code>/atlas/Role=production</code> is allowed to execute dpns-mkdir and dpns-setacl remotely. (Site admins are of course welcome to create the directories themselves.) | ||

| Line 16: | Line 25: | ||

* LAPP suggests giving ''write'' permission to <code>atlas/Role=lcgadmin</code> everywhere, so that the ATLAS admins can control the storage (fewer people hold <code>Role=lcgadmin</code> than <code>/atlas/Role=production</code>). Stephane will discuss this issue with ATLAS. | * LAPP suggests giving ''write'' permission to <code>atlas/Role=lcgadmin</code> everywhere, so that the ATLAS admins can control the storage (fewer people hold <code>Role=lcgadmin</code> than <code>/atlas/Role=production</code>). Stephane will discuss this issue with ATLAS. | ||

| − | + | = Space Requirements = | |

== ATLAS top directory == | == ATLAS top directory == | ||

* On this page the top directory for ATLAS is represented by <code>.../atlas</code>. Each site should replace it according to their SE configuration. | * On this page the top directory for ATLAS is represented by <code>.../atlas</code>. Each site should replace it according to their SE configuration. | ||

| Line 26: | Line 35: | ||

== Summary table for Space token and Name space == | == Summary table for Space token and Name space == | ||

| + | |||

| + | * The size in < > is for a typical T2 with ~500 CPUs and ~100 TB disk | ||

{| border="1" width="100%" | {| border="1" width="100%" | ||

| Line 35: | Line 46: | ||

| [[#ATLASDATADISK|ATLASDATADISK]] | | [[#ATLASDATADISK|ATLASDATADISK]] | ||

| '''<code>/atlas/Role=production</code>''' | | '''<code>/atlas/Role=production</code>''' | ||

| − | | [[#ATLASDATADISK|see below*]] | + | | not known yet <br>[[#ATLASDATADISK|see below*]] |

| '''<code>.../atlas/atlasdatadisk</code>''' | | '''<code>.../atlas/atlasdatadisk</code>''' | ||

write permission only to <code>/atlas/Role=production</code> | write permission only to <code>/atlas/Role=production</code> | ||

| Line 41: | Line 52: | ||

| [[#ATLASMCDISK|ATLASMCDISK]] | | [[#ATLASMCDISK|ATLASMCDISK]] | ||

| '''<code>/atlas/Role=production</code>''' | | '''<code>/atlas/Role=production</code>''' | ||

| − | | | + | | a share of 60TB (plus possible extra) <br>[[#ATLASMCDISK|see below*]] |

| '''<code>.../atlas/atlasmcdisk</code>''' <br> write permission only to <code>atlas/Role=production</code> | | '''<code>.../atlas/atlasmcdisk</code>''' <br> write permission only to <code>atlas/Role=production</code> | ||

|----- | |----- | ||

| [[#ATLASPRODDISK|ATLASPRODDISK]] | | [[#ATLASPRODDISK|ATLASPRODDISK]] | ||

| '''<code>/atlas/Role=production</code>''' | | '''<code>/atlas/Role=production</code>''' | ||

| − | | 2TB ([[#ATLASPRODDISK|see below*]]) | + | | <2TB>, (or 6GB/kSI2K) <br>([[#ATLASPRODDISK|see below*]]) |

| '''<code>.../atlas/atlasproddisk</code>''' <br> write permission only to <code>/atlas/Role=production</code> | | '''<code>.../atlas/atlasproddisk</code>''' <br> write permission only to <code>/atlas/Role=production</code> | ||

|----- | |----- | ||

| [[#ATLASGROUPDISK|ATLASGROUPDISK]] | | [[#ATLASGROUPDISK|ATLASGROUPDISK]] | ||

| '''<code>/atlas</code>''' (temporary solution. [[#ATLASGROUPDISK|see below*]]) | | '''<code>/atlas</code>''' (temporary solution. [[#ATLASGROUPDISK|see below*]]) | ||

| − | | | + | | a share of 30TB<br>([[#ATLASGROUPDISK|see below*]]) |

| '''<code>.../atlas/atlasgroupdisk</code>''' <br> write permission to <code>/atlas/Role=production</code> | | '''<code>.../atlas/atlasgroupdisk</code>''' <br> write permission to <code>/atlas/Role=production</code> | ||

<br>'''<code>.../atlas/atlasgroupdisk/$GROUP</code>''' <br> write permission to <code>/atlas/Role=production</code> and <code>/atlas/$GROUP/Role=production</code> | <br>'''<code>.../atlas/atlasgroupdisk/$GROUP</code>''' <br> write permission to <code>/atlas/Role=production</code> and <code>/atlas/$GROUP/Role=production</code> | ||

<br><br>($GROUP: [[#ATLASGROUPDISK|see below*]]) | <br><br>($GROUP: [[#ATLASGROUPDISK|see below*]]) | ||

|----- | |----- | ||

| − | | [[#ATLASUSERDISK|ATLASUSERDISK]] | + | | [[#ATLASUSERDISK|ATLASUSERDISK]]<br>([https://twiki.cern.ch/twiki/bin/view/Atlas/StorageSetUp#The_ATLASUSERDISK_space_token Atlas StorageSetUp]) |

| '''<code>/atlas</code>''' | | '''<code>/atlas</code>''' | ||

| − | | 5TB ([[#ATLASUSERDISK|see below*]]) | + | | <5TB> <br>([[#ATLASUSERDISK|see below*]]) |

| − | | '''<code>.../atlas/ | + | | '''<code>.../atlas/atlasuserdisk</code>''' <br> write permission to all ATLAS users<br>write permission to <code>/atlas/Role=production</code> for central deletion <br><span style="color:#FF0000;">the same for all the subdirectories</span> |

|----- | |----- | ||

| − | | [[#ATLASLOCALGROUPDISK|ATLASLOCALGROUPDISK]] | + | | [[#ATLASSCRATCHDISK|ATLASSCRATCHDISK]]<br>([https://twiki.cern.ch/twiki/bin/view/Atlas/StorageSetUp#The_ATLASSCRATCHDISK_space_token Atlas StorageSetUp]) |

| − | | '''<code>/atlas/<locality></code>''', <locality>=fr,ro,cn,jp,... | + | | '''<code>/atlas</code>''' |

| + | | <5TB> <br>([[#ATLASSCRATCHDISK|see below*]]) | ||

| + | | '''<code>.../atlas/atlasscratchdisk</code>''' <br> write permission to all ATLAS users<br>write permission to <code>/atlas/Role=production</code> for central deletion <br><span style="color:#FF0000;">the same for all the subdirectories</span> | ||

| + | |----- | ||

| + | | [[#ATLASLOCALGROUPDISK|ATLASLOCALGROUPDISK]]<br>([https://twiki.cern.ch/twiki/bin/view/Atlas/StorageSetUp#The_ATLASLOCALGROUPDISK_space_to Atlas StorageSetUp]) | ||

| + | | '''<code>/atlas/<locality></code>''', <locality>=fr,ro,cn,jp,...<br>'''<span style="color:#FF0000;"><code>/atlas</code></span>''' | ||

| sites to decide | | sites to decide | ||

| − | | '''<code>.../atlas/ | + | | '''<code>.../atlas/atlaslocalgroupdisk</code>''' <br> write permission to local ATLAS users (<code>/atlas/<locality></code>)<br>write permission to <code>/atlas/Role=production</code> for central deletion |

|} | |} | ||

| Line 73: | Line 89: | ||

* The spacetoken ATLASENDUSER, which was requested for ccrc08/fdr, is not required any longer, being replaced with ATLASUSERDISK. It can be removed. | * The spacetoken ATLASENDUSER, which was requested for ccrc08/fdr, is not required any longer, being replaced with ATLASUSERDISK. It can be removed. | ||

| + | |||

| + | === STEP09 requirements === | ||

| + | Presentation at [http://indico.cern.ch/materialDisplay.py?contribId=5&sessionId=3&materialId=slides&confId=45475 GDB (13 May 2009)] | ||

| + | |||

| + | * Total data export T1-T2(for 100%): 112 TB, 92.6MB/s | ||

| + | |||

| + | <ul> | ||

| + | {| border="1" width="100%" | ||

| + | | | ||

| + | | T0 AOD+DPD (0.2MB/event each) | ||

| + | | reprocessing AOD+DPD | ||

| + | | data total | ||

| + | | MC prod | ||

| + | | MC repro | ||

| + | | MC input for analysis | ||

| + | | output of analysis | ||

| + | | comment | ||

| + | |----- | ||

| + | | 200Hz trigger | ||

| + | | 46.3MB/s (average) | ||

| + | | 46.3MB/s | ||

| + | | 92.6MB/s | ||

| + | |----- | ||

| + | | 50ksec /day | ||

| + | | 4TB/day | ||

| + | | 4TB/day | ||

| + | | 8TB/day | ||

| + | |----- | ||

| + | | STEP09 (2 weeks) | ||

| + | | 56TB | ||

| + | | 56TB | ||

| + | | 112TB | ||

| + | | 3.5TB | ||

| + | | 3.5TB | ||

| + | | 50TB | ||

| + | |} | ||

| + | </ul> | ||
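The figures in the STEP09 table follow from a few lines of arithmetic. The sketch below reproduces them under two assumptions inferred from the numbers rather than stated in the GDB slides: decimal units (1 TB = 10^6 MB), and the 46.3 MB/s average being the daily volume spread over a full 86400 s day. All constant and function names are illustrative.

```python
# Worked arithmetic for the STEP09 T1-T2 export figures (illustrative names).
TRIGGER_RATE_HZ = 200          # events per second while taking data
LIVE_SECONDS_PER_DAY = 50_000  # "50ksec/day" of data taking
EVENT_SIZE_MB = 0.2            # per event, for each of AOD and DPD
FORMATS = 2                    # AOD + DPD
SECONDS_PER_DAY = 86_400
STEP09_DAYS = 14               # two weeks
MB_PER_TB = 1_000_000          # assumption: decimal units


def daily_volume_tb():
    """AOD+DPD volume produced per day by one stream (T0 or reprocessing)."""
    events_per_day = TRIGGER_RATE_HZ * LIVE_SECONDS_PER_DAY
    return events_per_day * EVENT_SIZE_MB * FORMATS / MB_PER_TB


def average_rate_mb_s():
    """Daily volume averaged over a full 24-hour day."""
    return daily_volume_tb() * MB_PER_TB / SECONDS_PER_DAY


def step09_volume_tb():
    """Volume of one stream over the two weeks of STEP09."""
    return daily_volume_tb() * STEP09_DAYS


if __name__ == "__main__":
    # The table takes the reprocessing stream to be as large as the T0 stream,
    # so the "data total" column is twice the single-stream value.
    print("per stream:", daily_volume_tb(), "TB/day,",
          round(average_rate_mb_s(), 1), "MB/s,", step09_volume_tb(), "TB")
    print("data total:", 2 * daily_volume_tb(), "TB/day,",
          round(2 * average_rate_mb_s(), 1), "MB/s,", 2 * step09_volume_tb(), "TB")
```

The MC columns (3.5TB, 3.5TB, 50TB) are quoted directly from the presentation and are not derived here.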

| + | |||

| + | * T2 Space Token Summary | ||

| + | <ul> | ||

| + | {| border="1" width="100%" | ||

| + | | space token | ||

| + | | space fraction | ||

| + | |----- | ||

| + | | [[#ATLASDATADISK|ATLASDATADISK]] | ||

| + | | 30% | ||

| + | |----- | ||

| + | | [[#ATLASMCDISK|ATLASMCDISK]] | ||

| + | | 25% | ||

| + | |----- | ||

| + | | [[#ATLASPRODDISK|ATLASPRODDISK]] | ||

| + | | 5% | ||

| + | |----- | ||

| + | | [[#ATLASGROUPDISK|ATLASGROUPDISK]] | ||

| + | | 20% | ||

| + | |----- | ||

| + | | [[#ATLASSCRATCHDISK|ATLASSCRATCHDISK]] | ||

| + | | 20% | ||

| + | |----- | ||

| + | | [[#ATLASLOCALGROUPDISK|ATLASLOCALGROUPDISK]] | ||

| + | | no requirement | ||

| + | |} | ||

| + | </ul> | ||
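As a quick check of how these fractions translate into sizes, the sketch below splits a site's total disk across the space tokens. The fractions are those of the table above; the 138 TB and 400 TB totals are the pledges listed for IN2P3-LAPP and TOKYO-LCG2 in the deployment table further down this page. The function name is illustrative.

```python
# Split a T2 disk total across space tokens using the STEP09 fractions.
SPACE_FRACTIONS = {
    "ATLASDATADISK": 0.30,
    "ATLASMCDISK": 0.25,
    "ATLASPRODDISK": 0.05,
    "ATLASGROUPDISK": 0.20,
    "ATLASSCRATCHDISK": 0.20,
    # ATLASLOCALGROUPDISK has no requirement and is outside the pledge.
}


def split_by_token(total_tb):
    """Return the size in TB of each space token for a given disk total."""
    return {token: round(total_tb * fraction, 1)
            for token, fraction in SPACE_FRACTIONS.items()}


if __name__ == "__main__":
    for site, pledge_tb in (("IN2P3-LAPP", 138.0), ("TOKYO-LCG2", 400.0)):
        print(site, split_by_token(pledge_tb))
```

The five fractions add up to 100%, so the per-token sizes sum back to the pledged total.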

== ATLASDATADISK == | == ATLASDATADISK == | ||

| − | * | + | * A total size estimate will follow later | ||

| + | |||

| + | Reprocessing Aug 09 | ||

| + | ESD 83TB, AOD 7TB, DPD 13TB, HIST/NTUP/LOG: 5TB, TOTAL 108TB | ||

| + | |||

| + | Fast reprocessing of Cosmic Jun09 | ||

| + | ESD 24.5TB | ||

| + | AOD 1.8TB | ||

| + | DPD 6.2TB | ||

| + | |||

| + | Cosmic Jun 09 | ||

| + | ~300 TB RAW | ||

| + | ~100 TB ESD, AOD, DPD | ||

| + | |||

| + | - From first two days, no DPD merging: | ||

| + | Type #files avg size [MB] total x7 | ||

| + | ------------------------------------ | ||

| + | RAW 13278 2020 25.6 TB 180 TB | ||

| + | ESD 10211 240 2.3 TB 16 TB | ||

| + | AOD 586 206 0.12 TB 0.8 TB | ||

| + | DPD 14017 57 0.76 TB 5.3 TB | ||

| + | |||

| + | Spring09 reprocessing | ||

| + | ESD 83TB | ||

| + | |||

| + | * Reprocessing Aug09 (30 July 2009) http://indico.cern.ch/materialDisplay.py?contribId=5&materialId=slides&confId=65114 | ||

| + | * Cosmic Jun09 http://indico.cern.ch/getFile.py/access?contribId=8&resId=0&materialId=1&confId=62496 | ||

| + | |||

| + | |||

| + | fast reprocessing of june cosmic data | ||

| + | 57 runs, 338 datasets, 145660 files, 295.4TB | ||

| + | https://twiki.cern.ch/twiki/bin/view/Atlas/DataPreparationReprocessing#Fast_reprocessing_of_June_July_2 | ||

| + | http://indico.cern.ch/materialDisplay.py?contribId=4&materialId=slides&confId=64069 | ||

| + | |||

* VOMS group associated with the space: /atlas/Role=production | * VOMS group associated with the space: /atlas/Role=production | ||

| − | * Namespace directory to be created: | + | * Namespace directory to be created: $DPNS_HOME/atlas/atlasdatadisk |

** Normally, sites already have this namespace created. | ** Normally, sites already have this namespace created. | ||

* Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users | * Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users | ||

| Line 95: | Line 207: | ||

== ATLASMCDISK == | == ATLASMCDISK == | ||

| + | This is the space where centrally produced simulated data are stored. | ||

* 15TB for a typical T2 with ~500 CPUs and ~100 TB disk | * 15TB for a typical T2 with ~500 CPUs and ~100 TB disk | ||

** 60TB for a T2 requesting 100% AOD. | ** 60TB for a T2 requesting 100% AOD. | ||

| − | ** The size | + | ** The size will increase in case D1PD are requested by the site. (100% D1PD will be 30TB) |

| + | ** The required space is 1/3 of the above for the period until Sep 2008, 2/3 for Sep - Dec 2008, and full for Jan - Mar 2009. | ||

| + | ** Using the shares at CCRC08, the required size for each FR site is: | ||

| + | <ul><ul> | ||

| + | <pre> | ||

| + | Required Space at | ||

| + | Site Share Jul08 Sep08 Dec08 Possibly with D1PD | ||

| + | IN2P3-LAPP 25% 5 TB 10 TB 15 TB 22.5 TB | ||

| + | IN2P3-CPPM 5% 1 TB 2 TB 3 TB 4.5 TB | ||

| + | IN2P3-LPSC 5% 1 TB 2 TB 3 TB 4.5 TB | ||

| + | IN2P3-LPC 25% 5 TB 10 TB 15 TB 22.5 TB | ||

| + | BEIJING-LCG2 20% 4 TB 8 TB 12 TB 18 TB | ||

| + | RO-07-NIPNE 10% 2 TB 4 TB 6 TB 9 TB | ||

| + | RO-02-NIPNE 10% 2 TB 4 TB 6 TB 9 TB | ||

| + | GRIF-LAL 45% 9 TB 18 TB 27 TB 40.5 TB | ||

| + | GRIF-LPNHE 25% 5 TB 10 TB 15 TB 22.5 TB | ||

| + | GRIF-SACLAY 30% 6 TB 12 TB 18 TB 27 TB | ||

| + | TOKYO-LCG2 100% 20 TB 40 TB 60 TB 90 TB | ||

| + | </pre> | ||

| + | </ul></ul> | ||
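The table above can be regenerated from the site share alone. The sketch below assumes what the text states: 60 TB for 100% AOD, an extra 30 TB for 100% D1PD, and a phase-in by thirds (Jul08, Sep08, Dec08 columns). The function names are illustrative.

```python
# Required ATLASMCDISK space per site, scaled from the site's share.
FULL_AOD_TB = 60.0    # a T2 holding 100% of the AOD
FULL_D1PD_TB = 30.0   # extra space if 100% of the D1PD is also requested
PHASES = ("Jul08", "Sep08", "Dec08")  # 1/3, 2/3 and full requirement


def phased_requirement_tb(share, full_tb=FULL_AOD_TB):
    """Space needed at each phase for a site taking `share` (0..1) of the data."""
    return {phase: share * full_tb * (i + 1) / len(PHASES)
            for i, phase in enumerate(PHASES)}


def with_d1pd_tb(share):
    """Full requirement if the site also requests its share of D1PD."""
    return share * (FULL_AOD_TB + FULL_D1PD_TB)


if __name__ == "__main__":
    for site, share in (("IN2P3-LAPP", 0.25), ("GRIF-LAL", 0.45), ("TOKYO-LCG2", 1.0)):
        print(site, phased_requirement_tb(share), with_d1pd_tb(share))
```

Calling `phased_requirement_tb(share, full_tb=30.0)` applies the same scaling to the 30 TB total quoted for ATLASGROUPDISK on this page.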

* VOMS group associated with the space: /atlas/Role=production | * VOMS group associated with the space: /atlas/Role=production | ||

| − | * Namespace directory to be created: <code>/atlas/atlasmcdisk</code> | + | * Namespace directory to be created: <code>$DPNS_HOME/atlas/atlasmcdisk</code> |

** Normally, sites already have this namespace created. | ** Normally, sites already have this namespace created. | ||

* Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users | * Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users | ||

| Line 117: | Line 249: | ||

== ATLASPRODDISK == | == ATLASPRODDISK == | ||

| − | * 2TB for a typical T2 with ~500 | + | This space is used by the ATLAS production system (PanDA) to hold input files, downloaded from the T1, for jobs that run at the site, and output files from those jobs that are to be replicated to the T1. Both input and output files are deleted centrally after a certain period. | ||

| + | * 2TB for a typical T2 with ~500 CPUs (the size is to be re-visited) | ||

** scales with the CPU capacity of the site. | ** scales with the CPU capacity of the site. | ||

** will be larger if the reconstruction jobs run on the site. | ** will be larger if the reconstruction jobs run on the site. | ||

| + | ** My private estimate is 6GB/kSI2K (400 kSI2K·s/event for simulation, 4MB/HITS, files kept for 7 days). | ||

| + | ** Using the MoU values, the required space for each site is: | ||

| + | <ul><ul> | ||

| + | <pre> | ||

| + | Site kSI2K Space | ||

| + | BEIJING 200 1.2 TB | ||

| + | GRIF 800 4.8 TB (federation) | ||

| + | LAPP 440 2.6 TB | ||

| + | LPC 400 2.4 TB | ||

| + | TOKYO 1000 6 TB | ||

| + | RO 400 2.4 TB (federation) | ||

| + | </pre> | ||

| + | </ul></ul> | ||
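The 6GB/kSI2K figure and the per-site table can be reproduced as follows. This is a sketch of the estimate, reading the CPU cost as 400 kSI2K-seconds per simulated event and assuming the CPUs are busy around the clock; both readings are inferred from the numbers, and the function names are illustrative.

```python
# Estimate of ATLASPRODDISK space per unit of CPU capacity.
SECONDS_PER_DAY = 86_400


def gb_per_ksi2k(cpu_cost_ksi2k_s=400.0, hits_mb_per_event=4.0, retention_days=7):
    """Disk needed per kSI2K: events simulated per day x HITS size x days kept."""
    events_per_day = SECONDS_PER_DAY / cpu_cost_ksi2k_s  # for 1 kSI2K of capacity
    return events_per_day * hits_mb_per_event * retention_days / 1000.0


def site_space_tb(capacity_ksi2k, rate_gb_per_ksi2k=6.0):
    """Space for a site, using the rounded 6 GB/kSI2K quoted on this page."""
    return capacity_ksi2k * rate_gb_per_ksi2k / 1000.0


if __name__ == "__main__":
    print("estimate:", gb_per_ksi2k(), "GB/kSI2K")
    for site, ksi2k in (("BEIJING", 200), ("GRIF", 800), ("LAPP", 440),
                        ("LPC", 400), ("TOKYO", 1000), ("RO", 400)):
        print(site, round(site_space_tb(ksi2k), 1), "TB")
```

The unrounded estimate is slightly above 6 GB/kSI2K; the table uses the rounded value.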

* VOMS group associated with the space: /atlas/Role=production | * VOMS group associated with the space: /atlas/Role=production | ||

| − | * Namespace directory to be created: | + | * Namespace directory to be created: $DPNS_HOME/atlas/atlasproddisk |

* Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users | * Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users | ||

| + | <ul> | ||

<pre> | <pre> | ||

# group: atlas/Role=production | # group: atlas/Role=production | ||

| Line 136: | Line 283: | ||

default:other::r-x | default:other::r-x | ||

</pre> | </pre> | ||

| + | </ul> | ||

== ATLASGROUPDISK == | == ATLASGROUPDISK == | ||

| − | * 6TB for a typical T2 with ~500 | + | This is the space to put group DPD files, which are not created by the central production system, but by group analyses with the group production roles. |

| − | ** The size | + | * 6TB for a typical T2 with ~500 CPUs and ~100 TB disk |

| + | ** 100% D2PD will be 30TB | ||

| + | ** The required space is 1/3 of the above for the period until Sep 2008, 2/3 for Sep - Dec 2008, and full for Jan - Mar 2009. | ||

| + | ** Using the shares at CCRC08, the required size for each FR site is: | ||

| + | <ul><ul> | ||

| + | <pre> | ||

| + | Required Space by | ||

| + | Site Share Jul08 Sep08 Dec08 | ||

| + | IN2P3-LAPP 25% 2.5 TB 5 TB 7.5 TB | ||

| + | IN2P3-CPPM 5% 0.5 TB 1 TB 1.5 TB | ||

| + | IN2P3-LPSC 5% 0.5 TB 1 TB 1.5 TB | ||

| + | IN2P3-LPC 25% 2.5 TB 5 TB 7.5 TB | ||

| + | BEIJING-LCG2 20% 2 TB 4 TB 6 TB | ||

| + | RO-07-NIPNE 10% 1 TB 2 TB 3 TB | ||

| + | RO-02-NIPNE 10% 1 TB 2 TB 3 TB | ||

| + | GRIF-LAL 45% 4.5 TB 9 TB 13.5 TB | ||

| + | GRIF-LPNHE 25% 2.5 TB 5 TB 7.5 TB | ||

| + | GRIF-SACLAY 30% 3 TB 6 TB 9 TB | ||

| + | TOKYO-LCG2 100% 10 TB 20 TB 30 TB | ||

| + | </pre> | ||

| + | </ul></ul> | ||

* One single space to be reserved for all the group activities ($GROUP = phys-beauty, phys-exotics, phys-gener, phys-hi, phys-higgs, phys-lumin, phys-sm, phys-susy, phys-top, perf-egamma, perf-flavtag, perf-jets, perf-muons, perf-tau, etc.) | * One single space to be reserved for all the group activities ($GROUP = phys-beauty, phys-exotics, phys-gener, phys-hi, phys-higgs, phys-lumin, phys-sm, phys-susy, phys-top, perf-egamma, perf-flavtag, perf-jets, perf-muons, perf-tau, etc.) | ||

| − | * VOMS group associated with the space: /atlas | + | * VOMS group associated with the space: <strike>/atlas</strike> <span style="color:#FF0000;">/atlas/Role=production</span> |

| − | ** A temporary solution until multiple group support to the spaces is available. | + | ** <strike>A temporary solution until multiple group support to the spaces is available. |

| − | ** Once it is available, the groups will be /atlas/Role=production and /atlas/$GROUP/Role=production for all $GROUP | + | ** Once it is available, the groups will be /atlas/Role=production and /atlas/$GROUP/Role=production for all $GROUP</strike> |

* Namespace directories to be created and their ACLs: | * Namespace directories to be created and their ACLs: | ||

| − | ** | + | ** $DPNS_HOME/atlas/atlasgroupdisk: writable by only atlas/Role=production, readable by all ATLAS users |

| − | ** | + | ** <strike>$DPNS_HOME/atlas/atlasgroupdisk/$GROUP: writable by atlas/Role=production and /atlas/$GROUP/Role=production, readable by all ATLAS users</strike> |

| − | ** eg. for phys-higgs | + | ** eg. for phys-higgs</strike> |

| + | |||

<ul><pre> | <ul><pre> | ||

# group: atlas/Role=production | # group: atlas/Role=production | ||

| Line 153: | Line 322: | ||

group::rwx #effective:rwx | group::rwx #effective:rwx | ||

group:atlas/Role=production:rwx #effective:rwx | group:atlas/Role=production:rwx #effective:rwx | ||

| − | |||

mask::rwx | mask::rwx | ||

other::r-x | other::r-x | ||

| Line 159: | Line 327: | ||

default:group::rwx | default:group::rwx | ||

default:group:atlas/Role=production:rwx | default:group:atlas/Role=production:rwx | ||

| − | |||

default:mask::rwx | default:mask::rwx | ||

default:other::r-x | default:other::r-x | ||

| − | </ | + | </pre> |

| − | + | </ul> | |

== ATLASUSERDISK == | == ATLASUSERDISK == | ||

| − | * 5TB for a typical T2 with ~500 | + | <span style="color:#FF0000;">This space has been decommissioned and replaced with ATLASSCRATCHDISK</span>. | ||

| + | This is the scratch space for users to put the output of their analysis jobs run at the site before copying them to their final destination. The files are to be deleted by central operation after a certain period of time. | ||

| + | * 5TB for a typical T2 with ~500 CPUs and ~100 TB disk | ||

* VOMS group associated with the space: /atlas | * VOMS group associated with the space: /atlas | ||

| − | * Namespace directory to be created: .../atlas/user | + | * Namespace directory to be created: .../atlas/atlasuserdisk/ (the old .../atlas/user is to be used for files without spacetoken) |

| − | * Namespace ACL: writable by all ATLAS users | + | * Namespace ACL: writable by all ATLAS users (group /atlas) and /atlas/Role=production (for central deletion). <strike>The user directories underneath should be writable only by the owner and /atlas/Role=production (for central deletion)</strike>. <span style="color:#FF0000;">all the subdirectories should follow this.</span> |

* Normally, this namespace has already been created at sites by user analysis jobs. | * Normally, this namespace has already been created at sites by user analysis jobs. | ||

* example commands | * example commands | ||

| − | ** <code>dpns-mkdir | + | ** <code>dpns-entergrpmap --group "atlas"</code> |

| − | ** <code>dpns-setacl -m g:atlas:rwx,m:rwx,d:g:atlas:r-x | + | ** <code>dpns-entergrpmap --group "atlas/Role=production"</code> |

| − | ** <code>dpns-getacl | + | ** <code>dpns-mkdir $DPNS_HOME/atlas/atlasuserdisk</code> |


| + | ** <code>dpns-setacl -m g:atlas:rwx,m:rwx,d:g:atlas:r-x,d:m:rwx $DPNS_HOME/atlas/atlasuserdisk</code> | ||

| + | ** <code>dpns-setacl -m g:atlas/Role=production:rwx,m:rwx,d:g:atlas/Role=production:rwx,d:m:rwx $DPNS_HOME/atlas/atlasuserdisk</code> | ||

| + | ** <code>dpns-getacl $DPNS_HOME/atlas/atlasuserdisk</code> | ||

<ul><pre> | <ul><pre> | ||

# group: atlas | # group: atlas | ||

user::rwx | user::rwx | ||

| − | group::rwx | + | group::rwx |

| − | group:atlas/Role= | + | group:atlas:rwx |

| + | group:atlas/Role=lcgadmin:rwx | ||

| + | group:atlas/Role=production:rwx | ||

mask::rwx | mask::rwx | ||

other::r-x | other::r-x | ||

default:user::rwx | default:user::rwx | ||

| − | default:group:: | + | default:group::rwx |

| + | default:group:atlas:rwx | ||

| + | default:group:atlas/Role=lcgadmin:rwx | ||

default:group:atlas/Role=production:rwx | default:group:atlas/Role=production:rwx | ||

default:mask::rwx | default:mask::rwx | ||

| Line 189: | Line 366: | ||

</pre></ul> | </pre></ul> | ||

| + | == ATLASSCRATCHDISK == | ||

| + | <span style="color:#FF0000;">This space is to be deployed to replace ATLASUSERDISK</span>. | ||

| + | During the migration period, both spaces should be available. The size of the space | ||

| + | is preferably the same as ATLASUSERDISK, but it is also possible to start with a small size | ||

| + | and increase it while monitoring the usage and decreasing ATLASUSERDISK. | ||

| + | |||

| + | This is the scratch space for users to put the output of their analysis jobs run at the site before copying them to their final destination. The files are to be deleted by central operation after a certain period of time. | ||

| + | * 5TB for a typical T2 with ~500 CPUs and ~100 TB disk | ||

| + | * VOMS group associated with the space: /atlas | ||

| + | * Namespace directory to be created: .../atlas/atlasscratchdisk/ | ||

| + | * Namespace ACL: writable by all ATLAS users (group /atlas) and /atlas/Role=production (for central deletion). <span style="color:#FF0000;">all the subdirectories should follow this.</span> | ||

| + | * Normally, this namespace has already been created at sites by user analysis jobs. | ||

| + | * example commands | ||

| + | ** <code>dpns-entergrpmap --group "atlas"</code> | ||

| + | ** <code>dpns-entergrpmap --group "atlas/Role=production"</code> | ||

| + | ** <code>dpns-mkdir $DPNS_HOME/atlas/atlasscratchdisk</code> | ||


| + | ** <code>dpns-setacl -m g:atlas:rwx,m:rwx,d:g:atlas:r-x,d:m:rwx $DPNS_HOME/atlas/atlasscratchdisk</code> | ||

| + | ** <code>dpns-setacl -m g:atlas/Role=production:rwx,m:rwx,d:g:atlas/Role=production:rwx,d:m:rwx $DPNS_HOME/atlas/atlasscratchdisk</code> | ||

| + | ** <code>dpns-getacl $DPNS_HOME/atlas/atlasscratchdisk</code> | ||

| + | <ul><pre> | ||

| + | # group: atlas | ||

| + | user::rwx | ||

| + | group::rwx | ||

| + | group:atlas:rwx | ||

| + | group:atlas/Role=lcgadmin:rwx | ||

| + | group:atlas/Role=production:rwx | ||

| + | mask::rwx | ||

| + | other::r-x | ||

| + | default:user::rwx | ||

| + | default:group::rwx | ||

| + | default:group:atlas:rwx | ||

| + | default:group:atlas/Role=lcgadmin:rwx | ||

| + | default:group:atlas/Role=production:rwx | ||

| + | default:mask::rwx | ||

| + | default:other::r-x | ||

| + | </pre></ul> | ||
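A listing like the one above can be checked mechanically before declaring a directory ready. The sketch below parses text in the format shown by <code>dpns-getacl</code> on this page and reports which required groups lack write permission in the access or default entries. It is only a text parser with illustrative names; it does not call any DPM command, and it ignores the mask, so it is a first-pass check rather than a statement of effective permissions.

```python
# Check a getacl-style listing for the write permissions required on
# .../atlas/atlasscratchdisk (illustrative helper, not part of DPM).
REQUIRED_GROUPS = ("atlas", "atlas/Role=production")


def parse_acl(text):
    """Return {(scope, kind, name): perms}; scope is 'access' or 'default'."""
    entries = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop "# group: ..." and "#effective:..."
        if not line:
            continue
        parts = line.split(":")
        scope = "access"
        if parts[0] == "default":
            scope, parts = "default", parts[1:]
        if len(parts) != 3:
            continue  # not an ACL entry
        kind, name, perms = parts
        entries[(scope, kind, name)] = perms
    return entries


def missing_write(text, groups=REQUIRED_GROUPS):
    """List (scope, group) pairs that do not have 'w' in the listing."""
    entries = parse_acl(text)
    return [(scope, group)
            for scope in ("access", "default")
            for group in groups
            if "w" not in entries.get((scope, "group", group), "")]


EXAMPLE = """\
# group: atlas
user::rwx
group::rwx
group:atlas:rwx
group:atlas/Role=lcgadmin:rwx
group:atlas/Role=production:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas:rwx
default:group:atlas/Role=lcgadmin:rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x
"""

if __name__ == "__main__":
    print(missing_write(EXAMPLE))
```

An empty result means every required group has write permission both on the directory itself and in the defaults inherited by new subdirectories, which is what the requirement that all subdirectories follow the same ACL asks for.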

== ATLASLOCALGROUPDISK == | == ATLASLOCALGROUPDISK == | ||

| + | This is a space for "local" users of the site, and is not included in the pledged resources to ATLAS. | ||

| + | * See the [https://twiki.cern.ch/twiki/bin/view/Atlas/StorageSetUp#The_ATLASLOCALGROUPDISK_space_to Atlas StorageSetUp] wiki page. | ||

* size to be decided by sites. | * size to be decided by sites. | ||

** the resources not included in the pledge. | ** the resources not included in the pledge. | ||

| − | * VOMS group associated with the space: /atlas/fr (or /atlas/ro, /atlas/cn, /atlas/jp correspondingly) | + | * VOMS group associated with the space: <strike>/atlas/fr (or /atlas/ro, /atlas/cn, /atlas/jp correspondingly)</strike> <span style="color:#FF0000;">/atlas</span> |

| − | * name space: .../atlas/fr/user (or .../atlas/ro/user, .../atlas/cn/user, .../atlas/jp/user, etc.) | + | * name space: $DPNS_HOME/atlas/atlaslocalgroupdisk (the previously required path, .../atlas/fr/user (or .../atlas/ro/user, .../atlas/cn/user, .../atlas/jp/user, etc.), is to be used for files without a space token) | ||

| − | * ACL: write permission only to /atlas/fr group (or /atlas/ro, /atlas/cn, /atlas/jp correspondingly) | + | * ACL: write permission <strike>only</strike> to /atlas/fr group (or /atlas/ro, /atlas/cn, /atlas/jp correspondingly) <span style="color:#FF0000;">and /atlas/Role=production</span> |

* example ACL: | * example ACL: | ||

<ul><pre> | <ul><pre> | ||

| − | + | # group: atlas/fr | |

| − | |||

| − | |||

| − | # group: atlas/ | ||

user::rwx | user::rwx | ||

| − | group:: | + | group::rwx |

| − | group:atlas/Role=lcgadmin:rwx | + | group:atlas/Role=lcgadmin:rwx |

| + | group:atlas/Role=production:rwx | ||

group:atlas/fr:rwx #effective:rwx | group:atlas/fr:rwx #effective:rwx | ||

mask::rwx | mask::rwx | ||

other::r-x | other::r-x | ||

default:user::rwx | default:user::rwx | ||

| − | default:group:: | + | default:group::r-x |

| + | default:group:atlas:r-x | ||

default:group:atlas/Role=lcgadmin:rwx | default:group:atlas/Role=lcgadmin:rwx | ||

| + | default:group:atlas/Role=production:rwx | ||

default:group:atlas/fr:rwx | default:group:atlas/fr:rwx | ||

default:mask::rwx | default:mask::rwx | ||

default:other::r-x | default:other::r-x | ||

</pre></ul> | </pre></ul> | ||

| + | |||

| + | = Deployment = | ||

| + | == Space Reservation deployment == | ||

| + | * The share used for STEP09 http://atladcops.cern.ch:8000/drmon/ftmon_TiersInfo.html [[Image:STEP09-FR-T2-share-used.PNG]] | ||

| + | |||

| + | |||

| + | <p> | ||

| + | 2009.05.19. (the xls file used to create this table [[Image:STEP09-FR.xls|STEP09-FR.xls]] [[Image:STEP09-FR-T2-Disk.xls|STEP09-FR-T2-Disk.xls]]) | ||

| + | * pledged capacity [[http://lcg.web.cern.ch/LCG/Resources/WLCGResources-2008-2013-05FEB2009.pdf Tier 1 & Tier 2 Pledge and Resources table (v 18 March 2009)]] | ||

| + | * shares and other assumptions same as the calculation 2009.05.18. | ||

| + | |||

| + | <ul> | ||

| + | <pre> | ||

| + | |||

| + | STEP09 Share CCRC space DATA MCDISK PROD GROUP SCRATCH USER Total LOCALGROUP | ||

| + | 112TB 112TB /112TB 30% 25% 5% 20% 20% | ||

| + | IN2P3-CC pledge 0.0 | ||

| + | deploy 212.0 454.7 11.0 2.7 4.0 684.4 1.0 | ||

| + | free 71.8 78.1 5.7 2.6 2.1 160.3 0.7 | ||

| + | IN2P3-LAPP pledge 37.0% 41.4 34.5 6.9 27.6 27.6 0.0 138.0 | ||

| + | deploy 20% 25% 6.1% 6.8 14.0 6.0 2.0 1.0 0.5 30.3 3.6 | ||

| + | free 22.4 28.0 4.0% 4.5 0.7 5.0 2.0 0.9 0.4 13.5 2.1 | ||

| + | IN2P3-LPC pledge 38.8% 43.5 36.3 7.3 29.0 29.0 0.0 145.0 | ||

| + | deploy 30% 25% 4.5% 5.0 17.0 3.0 2.0 3.0 3.0 33.0 1.0 | ||

| + | free 33.6 28.0 4.0% 4.5 12.8 2.6 2.0 2.2 3.0 27.1 0.9 | ||

| + | IN2P3-CPPM capacity 4.3% 4.9 4.1 0.8 3.2 3.2 0.0 16.2 | ||

| + | deploy 20% 5% 2.6% 2.9 8.8 2.9 0.1 1.0 0.5 16.2 0.1 | ||

| + | free 22.4 5.6 0.8% 0.9 1.1 2.5 0.1 0.9 0.5 6.0 0.1 | ||

| + | IN2P3-LPSC capacity 3.9% 4.4 3.6 0.7 2.9 2.9 0.0 14.5 | ||

| + | deploy 5% 5% 0.9% 1.0 10.0 2.0 0.5 0.5 0.5 14.5 | ||

| + | free 5.6 5.6 0.6% 0.7 6.9 1.5 0.5 0.5 0.4 10.5 | ||

| + | BEIJING-LCG2 pledge 53.6% 60.0 50.0 10.0 40.0 40.0 0.0 200.0 | ||

| + | deploy 12% 20% 4.5% 5.0 25.0 2.0 1.0 5.0 1.0 39.0 | ||

| + | free 13.4 22.4 4.4% 4.9 14.2 1.6 1.0 5.0 1.0 27.7 | ||

| + | GRIF pledge 182.4% 204.3 170.3 34.1 136.2 136.2 0.0 681.0 | ||

| + | deploy 74.1% 83.0 87.2 24.0 22.0 9.6 1.0 226.8 22.0 | ||

| + | free 66.0% 73.9 41.9 23.1 22.0 9.5 1.0 171.4 21.9 | ||

| + | GRIF-LAL deploy 45% 45% 8.9% 10.0 30.0 6.0 6.0 3.0 55.0 2.0 | ||

| + | free 50.4 50.4 2.5% 2.8 18.6 5.5 6.0 3.0 35.9 1.9 | ||

| + | GRIF-SACLAY deploy 30% 30% 3.6% 4.0 29.2 2.0 2.6 0.0 37.8 | ||

| + | free 33.6 33.6 3.6% 4.0 5.4 1.7 2.6 0.0 13.7 | ||

| + | GRIF-LPNHE deploy 25% 25% 61.6% 69.0 28.0 16.0 16.0 4.0 1.0 134.0 20.0 | ||

| + | free 28.0 28.0 59.9% 67.1 17.9 15.9 16.0 3.9 1.0 121.8 20.0 | ||

| + | TOKYO-LCG2 pledge 107.1% 120.0 100.0 20.0 80.0 80.0 0.0 400.0 | ||

| + | deploy 100% 100% 121.8% 136.4 109.1 13.6 9.1 9.1 9.1 286.4 9.1 | ||

| + | free 112.0 112.0 107.3% 120.2 17.6 11.0 9.1 8.5 9.0 175.4 2.6 | ||

| + | RO federation pledge 51.4% 57.6 48.0 9.6 38.4 38.4 0.0 192.0 | ||

| + | deploy 7.1% 8.0 20.0 8.0 6.0 10.0 7.9 59.9 0.0 | ||

| + | free 3.5% 3.9 4.2 7.0 6.0 10.0 7.9 39.0 0.0 | ||

| + | RO-02-NIPNE deploy 10% 10% 4.5% 5.0 11.0 6.0 3.0 5.0 3.0 33.0 | ||

| + | free 11.2 11.2 2.6% 2.9 4.0 5.2 3.0 5.0 3.0 23.1 | ||

| + | RO-07-NIPNE deploy 10% 10% 2.7% 3.0 9.0 2.0 3.0 5.0 4.9 26.9 | ||

| + | free 11.2 11.2 0.9% 1.0 0.2 1.8 3.0 5.0 4.9 15.9 | ||

| + | |||

| + | T2 total pledge 0.0 0.0 470.4% 526.8 439.0 87.8 351.2 351.2 0.0 1756.0 0.0 | ||

| + | deploy 307.0% 300.0% 302.8% 339.1 398.3 93.5 70.7 58.8 32.4 992.8 57.8 | ||

| + | free 343.8 336.0 260.1% 291.3 145.5 84.4 70.7 57.0 32.1 681.0 49.5 | ||

| + | </pre> | ||

| + | </ul> | ||

| + | |||

| + | |||

| + | <p> | ||

| + | 2009.05.18. | ||

| + | * pledged capacity [[http://lcg.web.cern.ch/LCG/Resources/WLCGResources-2008-2013-05FEB2009.pdf Tier 1 & Tier 2 Pledge and Resources table (v 18 March 2009)]] | ||

| + | * deployed and free space as of 18 May 2009 <br>[[Image:STEP09-FR-20090518a.PNG]] | ||

| + | * usage of MCDISK in STEP09 much smaller than that of DATADISK | ||

| + | * usage of MCDISK before STEP09 unknown | ||

| + | * usage of SCRATCHDISK (especially by user analysis jobs) unknown | ||

| + | * share according to http://atladcops.cern.ch:8000/drmon/ftmon_TiersInfo.html (assuming 10% per stream)<br>[[Image:STEP09-FR-share-20090518.PNG]] | ||

| + | |||

| + | |||

| + | * Automatic Query: SRM2.2 space token descriptions advertised in information system and associated ACLs[http://wn3.epcc.ed.ac.uk/srm/xml/srm_token_acls_table?token=ATLASSCRATCHDISK&vo=.*&site=.*&acl=.*] | ||

| + | |||

| + | |||

| + | Old calculation | ||

| + | |||

| + | * The table below shows spaces '''reserved/required'''. | ||

| + | ** The reserved spaces are obtained using srm commands. | ||

| + | ** The required spaces are obtained using the shares at ccrc08 and old requirements, thus they need to be revisited with the real size. | ||

| + | <ul> | ||

| + | <pre> | ||

| + | sizes in TB. | ||

| + | Site Share DATA MC PROD GROUP USER SCRATCH LOCALGROUP | ||

| + | IN2P3-CC 100% 222/ 427/ -/- 1.0/ 4.0 - 1.0 | ||

| + | IN2P3-LAPP 25% 6.8/ 14/15 6/2.6 2.0/7.5 0.5/ 1.0/ 3.6 | ||

| + | IN2P3-LPC 25% 5.0/ 11/15 3/2.4 2.0/7.5 3.0/ 3.0/ 1.0 | ||

| + | IN2P3-CPPM 5% 2.9/ 7.8/3 2.9/ 0.1/1.5 0.5/ - 0.1 | ||

| + | IN2P3-LPSC 5% 1.0/ 10/3 2/ 0.5/1.5 0.5/ 0.5/ --- | ||

| + | BEIJING-LCG2 20% 4.5/ 9.1/12 0.9/1.2 0.9/6 0.9/ - --- | ||

| + | GRIF-LAL 45% 6/ 15/27 6/ 6/13.5 2/ - 2.0 | ||

| + | GRIF-SACLAY 30% 4/ 13.8/18 2/ -/9 2/ 0.3/ --- | ||

| + | GRIF-LPNHE 25% 93/ 23/15 16/ 16/7.5 4/ 4/ 34.0 | ||

| + | TOKYO-LCG2 100% 136/ 82/60 5.5/6 9.1/30 9.1/ 9.1/ 9.1 | ||

| + | RO-02-NIPNE 10% 5/ 9/6 6/ 3/3 3/ - --- | ||

| + | RO-07-NIPNE 10% 3/ 9/6 2/ 3/3 5/ - --- | ||

| + | </pre> | ||

| + | </ul> | ||

| + | |||

| + | == ATLASUSERDISK deployment == | ||

| + | {| border="1" width="100%" | ||

| + | | SE | ||

| + | | owner | ||

| + | | group | ||

| + | | g: | ||

| + | | g:atlas | ||

| + | | g:atlas/ Role= production | ||

| + | | g:atlas/ Role= lcgadmin | ||

| + | | d:g: | ||

| + | | d:g:atlas | ||

| + | | d:g:atlas/ Role= production | ||

| + | | d:g:atlas/ Role= lcgadmin | ||

| + | | subdirectories | ||

| + | |----- | ||

| + | | clrlcgse01.in2p3.fr | ||

| + | | root | ||

| + | | atlas | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok, user08:ok, user08.EricLancon:?, user08.JohannesElmsheuser:? | ||

| + | |----- | ||

| + | | grid05.lal.in2p3.fr | ||

| + | | Stephane Jezequel | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | user:ok, user08:ok, user08.EricLancon:?, user08.FredericDerue:?, user08.JohannesElmsheuser:? | ||

| + | |----- | ||

| + | | node12.datagrid.cea.fr | ||

| + | | root | ||

| + | | root | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | | SAM:ok, user:ok, user08:ok, user08.EricLancon:?, user08.JohannesElmsheuser:ok | ||

| + | |----- | ||

| + | | lpnse1.in2p3.fr | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok, user08:ok, user08.EricLancon:ok, user08.FredericDerue:ok, user08.JohannesElmsheuser: | ||

| + | |----- | ||

| + | | lapp-se01.in2p3.fr | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok, users:ok, user08:ok, user08.EricLancon:?, user08.JohannesElmsheuser:? | ||

| + | |----- | ||

| + | | marsedpm.in2p3.fr | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | no subdirectory | ||

| + | |----- | ||

| + | | lpsc-se-dpm-server.in2p3.fr | ||

| + | | Alessandro Di Girolamo | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok | ||

| + | |----- | ||

| + | | lcg-se01.icepp.jp | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | not checked yet | ||

| + | |----- | ||

| + | | tbat05.nipne.ro | ||

| + | | root | ||

| + | | atlas/Role=production | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok, user08.EricLancon:? | ||

| + | |----- | ||

| + | | tbit00.nipne.ro | ||

| + | | Eric Lancon | ||

| + | | atlas/Role=pilot | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | user08.EricLancon:? | ||

| + | |} | ||

| + | |||

| + | |||

| + | == ATLASSCRATCHDISK deployment == | ||

| + | Requirements: [[#ATLASSCRATCHDISK]] | ||

| + | {| border="1" width="100%" | ||

| + | | SE | ||

| + | | Space Reservation | ||

| + | | owner | ||

| + | | group | ||

| + | | g: | ||

| + | | d:g: | ||

| + | | <span style="color:#FF0000;">comment</span> | ||

| + | |----- | ||

| + | | ccsrm.in2p3.fr | ||

| + | | <span style="color:#FF0000;">N/A</span> | ||

| + | |----- | ||

| + | | lapp-se01.in2p3.fr | ||

| + | | 1.0 TB | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | clrlcgse01.in2p3.fr | ||

| + | | 3.0 TB VO:atlas | ||

| + | | I Ueda | ||

| + | | atlas | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=production:rwx<br>g:atlas/Role=lcgadmin:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=production:rwx<br>d:g:atlas/Role=lcgadmin:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | lpsc-se-dpm-server.in2p3.fr | ||

| + | | 0.5 TB VO:atlas | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | marsedpm.in2p3.fr | ||

| + | | 0.977 TB | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | g::rwx<br>g:atlas/Role=production:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas:rwx | ||

| + | | d:g::rwx<br>d:g:atlas/Role=production:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | grid05.lal.in2p3.fr | ||

| + | | 3.0 TB VO:atlas | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=production:rwx<br>g:atlas/Role=lcgadmin:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=production:rwx<br>d:g:atlas/Role=lcgadmin:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | node12.datagrid.cea.fr | ||

| + | | 0.3 TB | ||

| + | | root | ||

| + | | atlas/Role=production | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | lpnse1.in2p3.fr | ||

| + | | 4.0 TB | ||

| + | | I Ueda | ||

| + | | atlas/Role=production | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=production:rwx<br>g:atlas/Role=lcgadmin:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=production:rwx<br>d:g:atlas/Role=lcgadmin:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | lcg-se01.icepp.jp | ||

| + | | 9.1 TB VO:atlas | ||

| + | | root | ||

| + | | atlas | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | ccsrm.ihep.ac.cn | ||

| + | | 5.0 TB | ||

| + | | root | ||

| + | | root | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | ok | ||

| + | |----- | ||

| + | | tbit00.nipne.ro | ||

| + | | 5.0 TB <span style="color:#FF0000;">VOMS:/atlas/Role=production</span> | ||

| + | | root | ||

| + | | root | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | <span style="color:#FF0000;">Need to correct the group for the space token</span> | ||

| + | |----- | ||

| + | | tbat05.nipne.ro | ||

| + | | 5.0 TB <span style="color:#FF0000;">VOMS:/atlas/Role=production</span> | ||

| + | | root | ||

| + | | root | ||

| + | | g::rwx<br>g:atlas:rwx<br>g:atlas/Role=lcgadmin:rwx<br>g:atlas/Role=production:rwx | ||

| + | | d:g::rwx<br>d:g:atlas:rwx<br>d:g:atlas/Role=lcgadmin:rwx<br>d:g:atlas/Role=production:rwx | ||

| + | | <span style="color:#FF0000;">Need to correct the group for the space token</span> | ||

| + | |} | ||

| + | |||

| + | == ATLASLOCALGROUPDISK deployment == | ||

| + | {| border="1" width="100%" | ||

| + | | SE | ||

| + | | owner | ||

| + | | group | ||

| + | | g: | ||

| + | | g:atlas | ||

| + | | g:atlas/ <locality> | ||

| + | | g:atlas/ Role= production | ||

| + | | g:atlas/ Role= lcgadmin | ||

| + | | d:g | ||

| + | | d:g:atlas | ||

| + | | d:g:atlas/<br><locality> | ||

| + | | d:g:atlas/<br>Role= production | ||

| + | | d:g:atlas/<br>Role= lcgadmin | ||

| + | | subdirectories | ||

| + | |----- | ||

| + | | clrlcgse01.in2p3.fr | ||

| + | | root | ||

| + | | root | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | |----- | ||

| + | | grid05.lal.in2p3.fr | ||

| + | | Stephane Jezequel | ||

| + | | atlas/fr | ||

| + | | rwx | ||

| + | | r-x | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | <span style="color:#0000FF;">'''r-x'''</span> | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | no subdirectory, 1 file (test1 by Stephane) | ||

| + | |----- | ||

| + | | node12.datagrid.cea.fr | ||

| + | | root | ||

| + | | root | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | <span style="color:#FF0000;">jerome:NG</span> | ||

| + | |----- | ||

| + | | lpnse1.in2p3.fr | ||

| + | | root | ||

| + | | atlas/ Role=production | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | |----- | ||

| + | | lapp-se01.in2p3.fr | ||

| + | | root | ||

| + | | atlas/ Role=production | ||

| + | | r-x | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok | ||

| + | |----- | ||

| + | | marsedpm.in2p3.fr | ||

| + | |----- | ||

| + | | lpsc-se-dpm-server.in2p3.fr | ||

| + | |----- | ||

| + | | lcg-se01.icepp.jp | ||

| + | | root | ||

| + | | atlas/jp | ||

| + | | rwx | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | <span style="color:#0000FF;">'''r-x'''</span> | ||

| + | | none | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | rwx | ||

| + | | SAM:ok, data08_cos:?, fdr08_run2:? | ||

| + | |----- | ||

| + | | tbat05.nipne.ro | ||

| + | |----- | ||

| + | | tbit00.nipne.ro | ||

| + | |} | ||

Latest revision as of 09:37, 5 August 2009

Introduction

Sources of information:

- Now ATLAS has prepared a page https://twiki.cern.ch/twiki/bin/view/Atlas/StorageSetUp

- https://twiki.cern.ch/twiki/bin/view/Atlas/Step09

- Presentations at GDB (13 May 2009) and Réunion LCG-France (18-19 May 2009)

- Presentations at ATLAS Tier-1/2/3 Jamboree (28 August 2008) [1]

Some comments about the contents

- Information only for T2s (and T3s).

- ACL information only for DPM for the moment.

- See the slides

- The requirement for ATLASGROUPDISK is temporary due to a technical limitation in DPM. ATLAS has contacted the DPM developers. The proper implementation is expected to be available in September.

- Site admins are requested to create at least the space tokens. The namespace directories can be left to ATLAS production if the top directory is properly set up so that /atlas/Role=production is allowed to execute dpns-mkdir and dpns-setacl remotely. (Of course site admins are welcome to create them themselves.)

- In general read permission should be given to all ATLAS users everywhere.

- In general write permission should be given to /atlas/Role=production everywhere, so that the ATLAS central deletion tool can work.

- It is suggested at LAPP to give write permission to atlas/Role=lcgadmin everywhere, so that the ATLAS admin can control the storage (fewer people in Role=lcgadmin than in /atlas/Role=production). Stephane will discuss this issue with ATLAS.

Space Requirements

ATLAS top directory

- On this page the top directory for ATLAS is represented by .../atlas. Each site should replace it according to its SE configuration.

  - e.g. lapp-se01.in2p3.fr:/dpm/in2p3.fr/home/atlas for LAPP.

- Although it is up to each site's policy, it is recommended to have this top directory configured so that only /atlas/Role=production and /atlas/Role=lcgadmin can create files/directories underneath and ordinary users cannot.

Summary table for Space token and Name space

- The size in < > is for a typical T2 with ~500 CPUs and ~100 TB disk

| space token | voms group to be associated | space to be reserved | namespace directory(-ies) to be created |

| ATLASDATADISK | /atlas/Role=production | not known yet (see below*) | .../atlas/atlasdatadisk; write permission only to |

| ATLASMCDISK | /atlas/Role=production | a share of 60TB (plus possible extra) (see below*) | .../atlas/atlasmcdisk; write permission only to atlas/Role=production |

| ATLASPRODDISK | /atlas/Role=production | <2TB>, (or 6GB/kSI2K) (see below*) | .../atlas/atlasproddisk; write permission only to /atlas/Role=production |

| ATLASGROUPDISK | /atlas (temporary solution, see below*) | a share of 30TB (see below*) | .../atlas/atlasgroupdisk; write permission to /atlas/Role=production |

| ATLASUSERDISK (Atlas StorageSetUp) | /atlas | <5TB> (see below*) | .../atlas/atlasuserdisk; write permission to all ATLAS users; write permission to /atlas/Role=production for central deletion; the same for all the subdirectories |

| ATLASSCRATCHDISK (Atlas StorageSetUp) | /atlas | <5TB> (see below*) | .../atlas/atlasscratchdisk; write permission to all ATLAS users; write permission to /atlas/Role=production for central deletion; the same for all the subdirectories |

| ATLASLOCALGROUPDISK (Atlas StorageSetUp) | /atlas/<locality>, <locality>=fr,ro,cn,jp,... /atlas | sites to decide | .../atlas/atlaslocalgroupdisk; write permission to local ATLAS users (/atlas/<locality>); write permission to /atlas/Role=production for central deletion |

- A T3 will need ATLASDATADISK if it would like to receive real data, ATLASMCDISK to receive simulated data, ATLASGROUPDISK to receive group analysis data, and ATLASPRODDISK to contribute to official production. ATLASUSERDISK is not necessary, but may be needed if the site contributes to non-local user analysis.

- The spacetokens ATLASGRP<GROUP> (eg. ATLASGRPTOP), which were requested for ccrc08/fdr, are no longer required, having been replaced with a single spacetoken ATLASGROUPDISK. No file should be using these spacetokens. They can be removed.

- The spacetoken ATLASENDUSER, which was requested for ccrc08/fdr, is not required any longer, being replaced with ATLASUSERDISK. It can be removed.

STEP09 requirements

presentation at [13 May 2009]

- Total data export T1-T2(for 100%): 112 TB, 92.6MB/s

| | T0 AOD+DPD (0.2MB/event each) | reprocessing AOD+DPD | data total | MC prod | MC repro | MC input for analysis | output of analysis | comment |

| 200Hz trigger | 46.3MB/s (average) | 46.3MB/s | 92.6MB/s | | | | | |

| 50ksec /day | 4TB/day | 4TB/day | 8TB/day | | | | | |

| STEP09 (2 weeks) | 56TB | 56TB | 112TB | 3.5TB | 3.5TB | 50TB | | |
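The figures in the table above can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes that the T0 stream consists of two formats (AOD and DPD) at 0.2 MB per event each, that the 200 Hz trigger is live for 50 ksec per day, and that reprocessing contributes the same volume as the T0 stream. The function and constant names are hypothetical.

```python
# Cross-check of the STEP09 export figures (assumptions stated in the text above).
TRIGGER_HZ = 200                 # trigger rate
EVENT_SIZE_MB = 0.2              # size per event, per format (AOD or DPD)
FORMATS = 2                      # AOD + DPD
LIVE_SECONDS_PER_DAY = 50_000    # "50ksec /day"
SECONDS_PER_DAY = 86_400
STEP09_DAYS = 14                 # "2 weeks"


def daily_volume_tb() -> float:
    """Volume of one stream (T0 or reprocessing) per day, in TB."""
    megabytes = TRIGGER_HZ * EVENT_SIZE_MB * FORMATS * LIVE_SECONDS_PER_DAY
    return megabytes / 1e6


def average_rate_mb_s() -> float:
    """Average rate of one stream over a full 24 h day, in MB/s."""
    return daily_volume_tb() * 1e6 / SECONDS_PER_DAY


def step09_total_tb(streams: int = 2) -> float:
    """Total exported volume over STEP09 for the given number of streams."""
    return daily_volume_tb() * streams * STEP09_DAYS


if __name__ == "__main__":
    print(f"per stream: {daily_volume_tb():.1f} TB/day, {average_rate_mb_s():.1f} MB/s")
    print(f"data total over STEP09: {step09_total_tb():.0f} TB")
```

Under these assumptions each stream comes out at about 4 TB/day and 46.3 MB/s, and the two streams together give the 112 TB quoted for the two weeks.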

- T2 Space Token Summary

| space token | space fraction |

| ATLASDATADISK | 30% |

| ATLASMCDISK | 25% |

| ATLASPRODDISK | 5% |

| ATLASGROUPDISK | 20% |

| ATLASSCRATCHDISK | 20% |

| ATLASLOCALGROUPDISK | no requirement |
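As an illustration of how the fractions above translate into sizes, the following sketch splits a total disk capacity across the space tokens. The helper name `split_capacity` is hypothetical; the 138 TB example corresponds to the IN2P3-LAPP pledge quoted in the deployment table on this page.

```python
# Split a site's total T2 disk capacity according to the STEP09 space-token fractions.
FRACTIONS = {
    "ATLASDATADISK": 0.30,
    "ATLASMCDISK": 0.25,
    "ATLASPRODDISK": 0.05,
    "ATLASGROUPDISK": 0.20,
    "ATLASSCRATCHDISK": 0.20,
}


def split_capacity(total_tb: float) -> dict:
    """Return the size in TB to reserve for each space token, rounded to 0.1 TB."""
    return {token: round(total_tb * fraction, 1) for token, fraction in FRACTIONS.items()}


if __name__ == "__main__":
    for token, size in split_capacity(138.0).items():
        print(f"{token:18s} {size:6.1f} TB")
```

ATLASLOCALGROUPDISK is left out on purpose, since it has no requirement and is not part of the pledge.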

ATLASDATADISK

- Total estimation of size comes later

Reprocessing Aug 09: ESD 83TB, AOD 7TB, DPD 13TB, HIST/NTUP/LOG: 5TB, TOTAL 108TB

Fast reprocessing of Cosmic Jun09: ESD 24.5TB, AOD 1.8TB, DPD 6.2TB

Cosmic Jun 09: ~300 TB RAW, ~100 TB ESD, AOD, DPD

- From first two days, no DPD merging:

| Type | #files | avg size [MB] | total | x7 |

| RAW | 13278 | 2020 | 25.6 TB | 180 TB |

| ESD | 10211 | 240 | 2.3 TB | 16 TB |

| AOD | 586 | 206 | 0.12 TB | 0.8 TB |

| DPD | 14017 | 57 | 0.76 TB | 5.3 TB |

Spring09 reprocessing: ESD 83TB

- Reprocessing Aug09 (30 July 2009) http://indico.cern.ch/materialDisplay.py?contribId=5&materialId=slides&confId=65114

- Cosmic Jun09 http://indico.cern.ch/getFile.py/access?contribId=8&resId=0&materialId=1&confId=62496

fast reprocessing of june cosmic data 57 runs, 338 datasets, 145660 files, 295.4TB

https://twiki.cern.ch/twiki/bin/view/Atlas/DataPreparationReprocessing#Fast_reprocessing_of_June_July_2

http://indico.cern.ch/materialDisplay.py?contribId=4&materialId=slides&confId=64069

- VOMS group associated with the space: /atlas/Role=production

- Namespace directory to be created: $DPNS_HOME/atlas/atlasdatadisk

- Normally, sites already have this namespace created.

- Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users

# group: atlas/Role=production
user::rwx
group::rwx #effective:rwx
group:atlas/Role=production:rwx #effective:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x
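A site admin may want to check such a listing automatically. The sketch below is a hypothetical helper, not part of DPM: it assumes the ACL text has the `type:qualifier:perms` layout shown above (one entry per line, `#` starting a comment) and reports which group entries carry write permission and whether `other` is read-only.

```python
# Hypothetical checker for an ACL listing in the format shown above.
SAMPLE_ACL = """\
# group: atlas/Role=production
user::rwx
group::rwx #effective:rwx
group:atlas/Role=production:rwx #effective:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x
"""


def parse_acl(text: str) -> dict:
    """Map each ACL entry (e.g. 'group:atlas/Role=production') to its permission string."""
    entries = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments such as '#effective:rwx'
        if not line:
            continue
        key, perms = line.rsplit(":", 1)       # permissions are the last field
        entries[key] = perms
    return entries


def writable_groups(entries: dict) -> list:
    """Named (non-default) group entries that have write permission."""
    return sorted(
        key.split(":", 1)[1]
        for key, perms in entries.items()
        if key.startswith("group:") and key != "group:" and "w" in perms
    )


def others_read_only(entries: dict) -> bool:
    """True if 'other' can read and traverse but not write."""
    return entries.get("other:") == "r-x"


if __name__ == "__main__":
    acl = parse_acl(SAMPLE_ACL)
    print("writable by:", writable_groups(acl))
    print("other is read-only:", others_read_only(acl))
```

For the ATLASDATADISK requirement, the expected result is that only atlas/Role=production appears among the writable named groups and that `other` stays read-only.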

ATLASMCDISK

This is the space where simulated data produced centrally go in.

- 15TB for a typical T2 with ~500 CPUs and ~100 TB disk

- 60TB for a T2 requesting 100% AOD.

- The size will increase in case D1PD are requested by the site. (100% D1PD will be 30TB)

- The required space is 1/3 of the above for the period until Sep 2008, 2/3 for Sep - Dec 2008, and full for Jan - Mar 2009.

- Using the shares at ccrc08, the required sizes for the FR sites are:

| Site | Share | Required space at Jul08 | Sep08 | Dec08 | Possibly with D1PD |

| IN2P3-LAPP | 25% | 5 TB | 10 TB | 15 TB | 22.5 TB |

| IN2P3-CPPM | 5% | 1 TB | 2 TB | 3 TB | 4.5 TB |

| IN2P3-LPSC | 5% | 1 TB | 2 TB | 3 TB | 4.5 TB |

| IN2P3-LPC | 25% | 5 TB | 10 TB | 15 TB | 22.5 TB |

| BEIJING-LCG2 | 20% | 4 TB | 8 TB | 12 TB | 18 TB |

| RO-07-NIPNE | 10% | 2 TB | 4 TB | 6 TB | 9 TB |

| RO-02-NIPNE | 10% | 2 TB | 4 TB | 6 TB | 9 TB |

| GRIF-LAL | 45% | 9 TB | 18 TB | 27 TB | 40.5 TB |

| GRIF-LPNHE | 25% | 5 TB | 10 TB | 15 TB | 22.5 TB |

| GRIF-SACLAY | 30% | 6 TB | 12 TB | 18 TB | 27 TB |

| TOKYO-LCG2 | 100% | 20 TB | 40 TB | 60 TB | 90 TB |

- VOMS group associated with the space: /atlas/Role=production

- Namespace directory to be created: $DPNS_HOME/atlas/atlasmcdisk

- Normally, sites already have this namespace created.

- Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users

# group: atlas/Role=production
user::rwx
group::rwx #effective:r-x
group:atlas/Role=production:rwx #effective:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x
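The ATLASMCDISK sizes per site quoted in this section follow from the site share, the 60 TB needed for 100% AOD, the optional 30 TB for 100% D1PD, and the ramp-up of 1/3, 2/3 and full. A minimal sketch of that calculation, with hypothetical names and with the assumption that the three ramp-up steps correspond to the Jul08, Sep08 and Dec08 columns:

```python
# Required ATLASMCDISK space for a site, from its share (a sketch, names are hypothetical).
AOD_FULL_TB = 60.0      # 100% AOD
D1PD_FULL_TB = 30.0     # 100% D1PD, only if requested by the site
RAMP_UP = {"Jul08": 1 / 3, "Sep08": 2 / 3, "Dec08": 1.0}


def mcdisk_required(share: float) -> dict:
    """Return the required space in TB per period, plus the size with D1PD included."""
    required = {period: round(share * AOD_FULL_TB * factor, 1)
                for period, factor in RAMP_UP.items()}
    required["with D1PD"] = round(share * (AOD_FULL_TB + D1PD_FULL_TB), 1)
    return required


if __name__ == "__main__":
    for site, share in [("IN2P3-LAPP", 0.25), ("GRIF-LAL", 0.45), ("TOKYO-LCG2", 1.0)]:
        print(site, mcdisk_required(share))
```

The same pattern applies to any space whose requirement is defined as a share of a fixed total.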

ATLASPRODDISK

This is the space used by the ATLAS production system (panda) for putting input files for the jobs to run on the site, downloading them from T1, and output files from the jobs which are to be replicated to the T1. Both input and output files are to be deleted centrally after a certain period.

- 2TB for a typical T2 with ~500 CPUs (the size is to be re-visited)

- scales with the CPU capacity of the site.

- will be larger if the reconstruction jobs run on the site.

- My private estimation is 6GB/kSI2K (400kSI2K/event for simulation, 4MB/HITS, 7days to keep).

- Using the MoU value, the required space for each site is:

| Site | kSI2K | Space |

| BEIJING | 200 | 1.2 TB |

| GRIF | 800 | 4.8 TB (federation) |

| LAPP | 440 | 2.6 TB |

| LPC | 400 | 2.4 TB |

| TOKYO | 1000 | 6 TB |

| RO | 400 | 2.4 TB (federation) |

- VOMS group associated with the space: /atlas/Role=production

- Namespace directory to be created: $DPNS_HOME/atlas/atlasproddisk

- Namespace ACL: writable by only atlas/Role=production, readable by all ATLAS users

# group: atlas/Role=production
user::rwx
group::rwx #effective:rwx
group:atlas/Role=production:rwx #effective:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x
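The 6GB/kSI2K estimate for ATLASPRODDISK can be reproduced as follows. This is a sketch under one assumption that is not spelled out on this page: the "400kSI2K/event" figure is read as 400 kSI2K-seconds of CPU per simulated event, so that 1 kSI2K produces one 4 MB HITS file every 400 seconds and the files are kept for 7 days. All names are hypothetical.

```python
# Reproduce the ATLASPRODDISK estimate (assumption: 400 kSI2K-seconds per simulated event).
CPU_COST_KSI2K_SEC_PER_EVENT = 400.0
HITS_SIZE_MB = 4.0
RETENTION_DAYS = 7
SECONDS_PER_DAY = 86_400


def gb_per_ksi2k() -> float:
    """Disk needed per kSI2K of CPU to hold the output of the retention period, in GB."""
    events = RETENTION_DAYS * SECONDS_PER_DAY / CPU_COST_KSI2K_SEC_PER_EVENT
    return events * HITS_SIZE_MB / 1000.0


def proddisk_tb(ksi2k: float, gb_per_unit: float = 6.0) -> float:
    """Required ATLASPRODDISK size in TB for a site with the given CPU capacity."""
    return ksi2k * gb_per_unit / 1000.0


if __name__ == "__main__":
    print(f"estimate: {gb_per_ksi2k():.2f} GB per kSI2K")
    for site, ksi2k in [("BEIJING", 200), ("GRIF", 800), ("LAPP", 440), ("TOKYO", 1000)]:
        print(f"{site:8s} {proddisk_tb(ksi2k):.1f} TB")
```

With these inputs the per-kSI2K figure comes out slightly above 6 GB, and the rounded value of 6 GB/kSI2K gives the per-site sizes listed in this section.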

ATLASGROUPDISK

This is the space to put group DPD files, which are not created by the central production system, but by group analyses with the group production roles.

- 6TB for a typical T2 with ~500 CPUs and ~100 TB disk

- 100% D2PD will be 30TB

- The required space is 1/3 of the above for the period until Sep 2008, 2/3 for Sep - Dec 2008, and full for Jan - Mar 2009.

- Using the shares at ccrc08, the required sizes for the FR sites are:

| Site | Share | Required space by Jul08 | Sep08 | Dec08 |

| IN2P3-LAPP | 25% | 2.5 TB | 5 TB | 7.5 TB |

| IN2P3-CPPM | 5% | 0.5 TB | 1 TB | 1.5 TB |

| IN2P3-LPSC | 5% | 0.5 TB | 1 TB | 1.5 TB |

| IN2P3-LPC | 25% | 2.5 TB | 5 TB | 7.5 TB |

| BEIJING-LCG2 | 20% | 2 TB | 4 TB | 6 TB |

| RO-07-NIPNE | 10% | 1 TB | 2 TB | 3 TB |

| RO-02-NIPNE | 10% | 1 TB | 2 TB | 3 TB |

| GRIF-LAL | 45% | 4.5 TB | 9 TB | 13.5 TB |

| GRIF-LPNHE | 25% | 2.5 TB | 5 TB | 7.5 TB |

| GRIF-SACLAY | 30% | 3 TB | 6 TB | 9 TB |

| TOKYO-LCG2 | 100% | 10 TB | 20 TB | 30 TB |

- One single space to be reserved for all the group activities ($GROUP = phys-beauty, phys-exotics, phys-gener, phys-hi, phys-higgs, phys-lumin, phys-sm, phys-susy, phys-top, perf-egamma, perf-flavtag, perf-jets, perf-muons, perf-tau, etc.)

- VOMS group associated with the space: /atlas /atlas/Role=production. A temporary solution until multiple-group support for the spaces is available. Once it is available, the groups will be /atlas/Role=production and /atlas/$GROUP/Role=production for all $GROUP.

- Namespace directories to be created and their ACLs:

  - $DPNS_HOME/atlas/atlasgroupdisk: writable by only atlas/Role=production, readable by all ATLAS users

  - $DPNS_HOME/atlas/atlasgroupdisk/$GROUP: writable by atlas/Role=production and /atlas/$GROUP/Role=production, readable by all ATLAS users

    - eg. for phys-higgs

# group: atlas/Role=production
user::rwx
group::rwx #effective:rwx
group:atlas/Role=production:rwx #effective:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x

ATLASUSERDISK

This space has been decommissioned and replaced with ATLASSCRATCHDISK. This is the scratch space for users to put the output of their analysis jobs run at the site before copying them to their final destination. The files are to be deleted by central operation after a certain period of time.

- 5TB for a typical T2 with ~500 CPUs and ~100 TB disk

- VOMS group associated with the space: /atlas

- Namespace directory to be created: .../atlas/atlasuserdisk/ (the old .../atlas/user is to be used for files without spacetoken)

- Namespace ACL: writable by all ATLAS users (group /atlas) and /atlas/Role=production (for central deletion).

The user directories underneath should be writable only by the owner and /atlas/Role=production (for central deletion). All the subdirectories should follow this.

- Normally, sites already have this namespace, created by user analysis jobs so far.

- example commands

dpns-entergrpmap --group "atlas"
dpns-entergrpmap --group "atlas/Role=production"
dpns-mkdir $DPNS_HOME/atlas/atlasuserdisk
dpns-setacl -m g:atlas:rwx,m:rwx,d:g:atlas:r-x,d:m:rwx $DPNS_HOME/atlas/atlasuserdisk
dpns-setacl -m g:atlas/Role=production:rwx,m:rwx,d:g:atlas/Role=production:rwx,d:m:rwx $DPNS_HOME/atlas/atlasuserdisk
dpns-getacl $DPNS_HOME/atlas/atlasuserdisk

# group: atlas
user::rwx
group::rwx
group:atlas:rwx
group:atlas/Role=lcgadmin:rwx
group:atlas/Role=production:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas:rwx
default:group:atlas/Role=lcgadmin:rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x

ATLASSCRATCHDISK

This space is to be deployed to replace ATLASUSERDISK. During the migration period, both spaces should be available. The size of the space is preferably the same as ATLASUSERDISK, but it is also possible to start with a small size and increase it while monitoring the usage and decreasing ATLASUSERDISK.

This is the scratch space for users to put the output of their analysis jobs run at the site before copying them to their final destination. The files are to be deleted by central operation after a certain period of time.

- 5TB for a typical T2 with ~500 CPUs and ~100 TB disk

- VOMS group associated with the space: /atlas

- Namespace directory to be created: .../atlas/atlasscratchdisk/

- Namespace ACL: writable by all ATLAS users (group /atlas) and /atlas/Role=production (for central deletion). all the subdirectories should follow this.

- Normally, sites already have this namespace, created by user analysis jobs so far.

- example commands

dpns-entergrpmap --group "atlas"
dpns-entergrpmap --group "atlas/Role=production"
dpns-mkdir $DPNS_HOME/atlas/atlasscratchdisk
dpns-setacl -m g:atlas:rwx,m:rwx,d:g:atlas:r-x,d:m:rwx $DPNS_HOME/atlas/atlasscratchdisk
dpns-setacl -m g:atlas/Role=production:rwx,m:rwx,d:g:atlas/Role=production:rwx,d:m:rwx $DPNS_HOME/atlas/atlasscratchdisk
dpns-getacl $DPNS_HOME/atlas/atlasscratchdisk

# group: atlas
user::rwx
group::rwx
group:atlas:rwx
group:atlas/Role=lcgadmin:rwx
group:atlas/Role=production:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::rwx
default:group:atlas:rwx
default:group:atlas/Role=lcgadmin:rwx
default:group:atlas/Role=production:rwx
default:mask::rwx
default:other::r-x

ATLASLOCALGROUPDISK

This is a space for "local" users of the site, and is not included in the pledged resources to ATLAS.

- See the Atlas StorageSetUp wiki page.

- size to be decided by sites.

- the resources not included in the pledge.

- VOMS group associated with the space: /atlas/fr (or /atlas/ro, /atlas/cn, /atlas/jp correspondingly) /atlas

- name space: $DPNS_HOME/atlas/atlaslocalgroupdisk (the previously required path .../atlas/fr/user (or .../atlas/ro/user, .../atlas/cn/user, .../atlas/jp/user, etc.) is to be used for files without space token)

- ACL: write permission only to /atlas/fr group (or /atlas/ro, /atlas/cn, /atlas/jp correspondingly) and /atlas/Role=production

- example ACL:

# group: atlas/fr
user::rwx
group::rwx
group:atlas/Role=lcgadmin:rwx
group:atlas/Role=production:rwx
group:atlas/fr:rwx #effective:rwx
mask::rwx
other::r-x
default:user::rwx
default:group::r-x
default:group:atlas:r-x
default:group:atlas/Role=lcgadmin:rwx
default:group:atlas/Role=production:rwx
default:group:atlas/fr:rwx
default:mask::rwx
default:other::r-x

Deployment

Space Reservation deployment

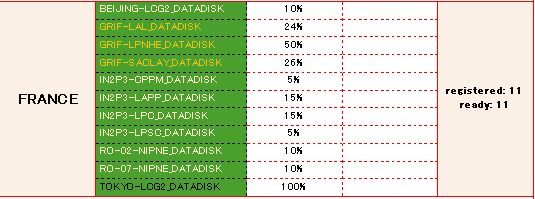

- The share used for STEP09 http://atladcops.cern.ch:8000/drmon/ftmon_TiersInfo.html

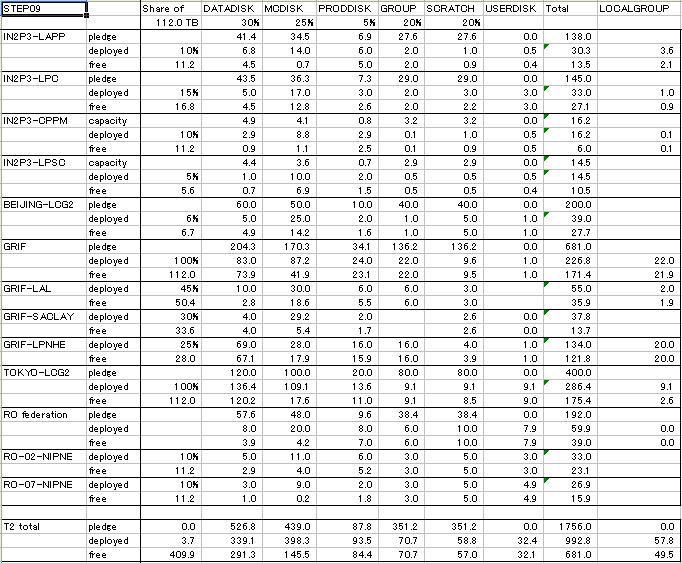

2009.05.19. (the xls file used to create this table File:STEP09-FR.xls File:STEP09-FR-T2-Disk.xls)

- pledged capacity [Tier 1 & Tier 2 Pledge and Resources table (v 18 March 2009)]

- shares and other assumptions same as the calculation 2009.05.18.

STEP09 Share CCRC space DATA MCDISK PROD GROUP SCRATCH USER Total LOCALGROUP
112TB 112TB /112TB 30% 25% 5% 20% 20%
IN2P3-CC pledge 0.0
deploy 212.0 454.7 11.0 2.7 4.0 684.4 1.0
free 71.8 78.1 5.7 2.6 2.1 160.3 0.7
IN2P3-LAPP pledge 37.0% 41.4 34.5 6.9 27.6 27.6 0.0 138.0
deploy 20% 25% 6.1% 6.8 14.0 6.0 2.0 1.0 0.5 30.3 3.6
free 22.4 28.0 4.0% 4.5 0.7 5.0 2.0 0.9 0.4 13.5 2.1
IN2P3-LPC pledge 38.8% 43.5 36.3 7.3 29.0 29.0 0.0 145.0
deploy 30% 25% 4.5% 5.0 17.0 3.0 2.0 3.0 3.0 33.0 1.0
free 33.6 28.0 4.0% 4.5 12.8 2.6 2.0 2.2 3.0 27.1 0.9
IN2P3-CPPM capacity 4.3% 4.9 4.1 0.8 3.2 3.2 0.0 16.2
deploy 20% 5% 2.6% 2.9 8.8 2.9 0.1 1.0 0.5 16.2 0.1
free 22.4 5.6 0.8% 0.9 1.1 2.5 0.1 0.9 0.5 6.0 0.1
IN2P3-LPSC capacity 3.9% 4.4 3.6 0.7 2.9 2.9 0.0 14.5
deploy 5% 5% 0.9% 1.0 10.0 2.0 0.5 0.5 0.5 14.5
free 5.6 5.6 0.6% 0.7 6.9 1.5 0.5 0.5 0.4 10.5
BEIJING-LCG2 pledge 53.6% 60.0 50.0 10.0 40.0 40.0 0.0 200.0
deploy 12% 20% 4.5% 5.0 25.0 2.0 1.0 5.0 1.0 39.0
free 13.4 22.4 4.4% 4.9 14.2 1.6 1.0 5.0 1.0 27.7
GRIF pledge 182.4% 204.3 170.3 34.1 136.2 136.2 0.0 681.0
deploy 74.1% 83.0 87.2 24.0 22.0 9.6 1.0 226.8 22.0
free 66.0% 73.9 41.9 23.1 22.0 9.5 1.0 171.4 21.9
GRIF-LAL deploy 45% 45% 8.9% 10.0 30.0 6.0 6.0 3.0 55.0 2.0
free 50.4 50.4 2.5% 2.8 18.6 5.5 6.0 3.0 35.9 1.9
GRIF-SACLAY deploy 30% 30% 3.6% 4.0 29.2 2.0 2.6 0.0 37.8
free 33.6 33.6 3.6% 4.0 5.4 1.7 2.6 0.0 13.7
GRIF-LPNHE deploy 25% 25% 61.6% 69.0 28.0 16.0 16.0 4.0 1.0 134.0 20.0
free 28.0 28.0 59.9% 67.1 17.9 15.9 16.0 3.9 1.0 121.8 20.0
TOKYO-LCG2 pledge 107.1% 120.0 100.0 20.0 80.0 80.0 0.0 400.0
deploy 100% 100% 121.8% 136.4 109.1 13.6 9.1 9.1 9.1 286.4 9.1
free 112.0 112.0 107.3% 120.2 17.6 11.0 9.1 8.5 9.0 175.4 2.6
RO federation pledge 51.4% 57.6 48.0 9.6 38.4 38.4 0.0 192.0
deploy 7.1% 8.0 20.0 8.0 6.0 10.0 7.9 59.9 0.0
free 3.5% 3.9 4.2 7.0 6.0 10.0 7.9 39.0 0.0
RO-02-NIPNE deploy 10% 10% 4.5% 5.0 11.0 6.0 3.0 5.0 3.0 33.0
free 11.2 11.2 2.6% 2.9 4.0 5.2 3.0 5.0 3.0 23.1
RO-07-NIPNE deploy 10% 10% 2.7% 3.0 9.0 2.0 3.0 5.0 4.9 26.9
free 11.2 11.2 0.9% 1.0 0.2 1.8 3.0 5.0 4.9 15.9
T2 total pledge 0.0 0.0 470.4% 526.8 439.0 87.8 351.2 351.2 0.0 1756.0 0.0
deploy 307.0% 300.0% 302.8% 339.1 398.3 93.5 70.7 58.8 32.4 992.8 57.8
free 343.8 336.0 260.1% 291.3 145.5 84.4 70.7 57.0 32.1 681.0 49.5

2009.05.18.

- pledged capacity [Tier 1 & Tier 2 Pledge and Resources table (v 18 March 2009)]

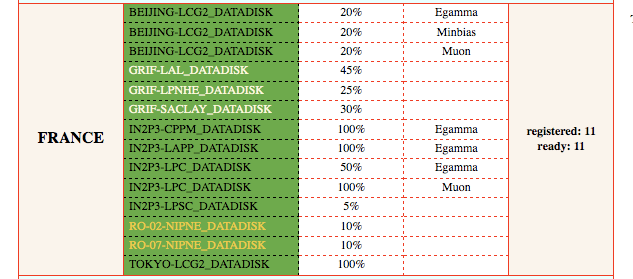

- deployed and free space as of 2009 May 18

- usage of MCDISK in STEP09 much smaller than that of DATADISK

- usage of MCDISK before STEP09 unknown

- usage of SCRATCHDISK (especially by user analysis jobs) unknown

- share according to http://atladcops.cern.ch:8000/drmon/ftmon_TiersInfo.html (assuming 10% per stream)

- Automatic Query: SRM2.2 space token descriptions advertised in information system and associated ACLs[4]

Old calculation

- The table below shows spaces reserved/required.

- The reserved spaces are obtained using srm commands.

- The required spaces are obtained using the shares at ccrc08 and old requirements, thus they need to be revisited with the real size.

Sizes in TB, given as reserved/required.

| Site | Share | DATA | MC | PROD | GROUP | USER | SCRATCH | LOCALGROUP |

| IN2P3-CC | 100% | 222/ | 427/ | -/- | 1.0/ | 4.0 | - | 1.0 |

| IN2P3-LAPP | 25% | 6.8/ | 14/15 | 6/2.6 | 2.0/7.5 | 0.5/ | 1.0/ | 3.6 |

| IN2P3-LPC | 25% | 5.0/ | 11/15 | 3/2.4 | 2.0/7.5 | 3.0/ | 3.0/ | 1.0 |

| IN2P3-CPPM | 5% | 2.9/ | 7.8/3 | 2.9/ | 0.1/1.5 | 0.5/ | - | 0.1 |

| IN2P3-LPSC | 5% | 1.0/ | 10/3 | 2/ | 0.5/1.5 | 0.5/ | 0.5/ | --- |

| BEIJING-LCG2 | 20% | 4.5/ | 9.1/12 | 0.9/1.2 | 0.9/6 | 0.9/ | - | --- |

| GRIF-LAL | 45% | 6/ | 15/27 | 6/ | 6/13.5 | 2/ | - | 2.0 |

| GRIF-SACLAY | 30% | 4/ | 13.8/18 | 2/ | -/9 | 2/ | 0.3/ | --- |

| GRIF-LPNHE | 25% | 93/ | 23/15 | 16/ | 16/7.5 | 4/ | 4/ | 34.0 |

| TOKYO-LCG2 | 100% | 136/ | 82/60 | 5.5/6 | 9.1/30 | 9.1/ | 9.1/ | 9.1 |

| RO-02-NIPNE | 10% | 5/ | 9/6 | 6/ | 3/3 | 3/ | - | --- |

| RO-07-NIPNE | 10% | 3/ | 9/6 | 2/ | 3/3 | 5/ | - | --- |

ATLASUSERDISK deployment

| SE | owner | group | g: | g:atlas | g:atlas/ Role= production | g:atlas/ Role= lcgadmin | d:g: | d:g:atlas | d:g:atlas/ Role= production | d:g:atlas/ Role= lcgadmin | subdirectories |

| clrlcgse01.in2p3.fr | root | atlas | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | SAM:ok, user08:ok, user08.EricLancon:?, user08.JohannesElmsheuser:? |

| grid05.lal.in2p3.fr | Stephane Jezequel | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | user:ok, user08:ok, user08.EricLancon:?, user08.FredericDerue:?, user08.JohannesElmsheuser:? |

| node12.datagrid.cea.fr | root | root | rwx | rwx | rwx | none | rwx | rwx | rwx | none | SAM:ok, user:ok, user08:ok, user08.EricLancon:?, user08.JohannesElmsheuser:ok |

| lpnse1.in2p3.fr | I Ueda | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | SAM:ok, user08:ok, user08.EricLancon:ok, user08.FredericDerue:ok, user08.JohannesElmsheuser: |

| lapp-se01.in2p3.fr | I Ueda | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | SAM:ok, users:ok, user08:ok, user08.EricLancon:?, user08.JohannesElmsheuser:? |

| marsedpm.in2p3.fr | I Ueda | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | no subdirectory |

| lpsc-se-dpm-server.in2p3.fr | Alessandro Di Girolamo | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | SAM:ok |

| lcg-se01.icepp.jp | I Ueda | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | not checked yet |

| tbat05.nipne.ro | root | atlas/Role=production | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | SAM:ok, user08.EricLancon:? |

| tbit00.nipne.ro | Eric Lancon | atlas/Role=pilot | rwx | rwx | rwx | rwx | rwx | rwx | rwx | rwx | user08.EricLancon:? |

ATLASSCRATCHDISK deployment

Requirements: #ATLASSCRATCHDISK

| SE | Space Reservation | owner | group | g: | d:g: | comment |

| ccsrm.in2p3.fr | N/A | | | | | |

| lapp-se01.in2p3.fr | 1.0 TB | I Ueda | atlas/Role=production | g::rwx g:atlas:rwx g:atlas/Role=lcgadmin:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas/Role=production:rwx | ok |

| clrlcgse01.in2p3.fr | 3.0 TB VO:atlas | I Ueda | atlas | g::rwx g:atlas:rwx g:atlas/Role=production:rwx g:atlas/Role=lcgadmin:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=production:rwx d:g:atlas/Role=lcgadmin:rwx | ok |

| lpsc-se-dpm-server.in2p3.fr | 0.5 TB VO:atlas | I Ueda | atlas/Role=production | g::rwx g:atlas:rwx g:atlas/Role=lcgadmin:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas/Role=production:rwx | ok |

| marsedpm.in2p3.fr | 0.977 TB | I Ueda | atlas/Role=production | g::rwx g:atlas/Role=production:rwx g:atlas/Role=lcgadmin:rwx g:atlas:rwx | d:g::rwx d:g:atlas/Role=production:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas:rwx | ok |

| grid05.lal.in2p3.fr | 3.0 TB VO:atlas | I Ueda | atlas/Role=production | g::rwx g:atlas:rwx g:atlas/Role=production:rwx g:atlas/Role=lcgadmin:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=production:rwx d:g:atlas/Role=lcgadmin:rwx | ok |

| node12.datagrid.cea.fr | 0.3 TB | root | atlas/Role=production | g::rwx g:atlas:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=production:rwx | ok |

| lpnse1.in2p3.fr | 4.0 TB | I Ueda | atlas/Role=production | g::rwx g:atlas:rwx g:atlas/Role=production:rwx g:atlas/Role=lcgadmin:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=production:rwx d:g:atlas/Role=lcgadmin:rwx | ok |

| lcg-se01.icepp.jp | 9.1 TB VO:atlas | root | atlas | g::rwx g:atlas:rwx g:atlas/Role=lcgadmin:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas/Role=production:rwx | ok |

| ccsrm.ihep.ac.cn | 5.0 TB | root | root | g::rwx g:atlas:rwx g:atlas/Role=lcgadmin:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas/Role=production:rwx | ok |

| tbit00.nipne.ro | 5.0 TB VOMS:/atlas/Role=production | root | root | g::rwx g:atlas:rwx g:atlas/Role=lcgadmin:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas/Role=production:rwx | Need to correct the group for the space token |

| tbat05.nipne.ro | 5.0 TB VOMS:/atlas/Role=production | root | root | g::rwx g:atlas:rwx g:atlas/Role=lcgadmin:rwx g:atlas/Role=production:rwx | d:g::rwx d:g:atlas:rwx d:g:atlas/Role=lcgadmin:rwx d:g:atlas/Role=production:rwx | Need to correct the group for the space token |
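
The ACL strings in the `g:` and `d:g:` columns above can be checked by script rather than by eye. The following is a minimal Python sketch under stated assumptions: the helper names `parse_acl` and `groups_missing_rwx` are hypothetical, the entry syntax (`g:<group>:<perms>`, with a `d:` prefix for default entries) is taken from the table itself, and the list of groups to check is an illustrative input, not a statement of the ATLAS requirements.

```python
from typing import Dict, Iterable, List, Tuple

AclKey = Tuple[bool, str, str]  # (is_default, entry_type, name)


def parse_acl(acl_text: str) -> Dict[AclKey, str]:
    """Parse whitespace-separated ACL entries such as 'g:atlas:rwx' or 'd:g::rwx'."""
    entries: Dict[AclKey, str] = {}
    for token in acl_text.split():
        # A leading 'd:' marks a default (inherited) entry.
        is_default = token.startswith("d:")
        if is_default:
            token = token[2:]
        entry_type, rest = token.split(":", 1)
        # The permission string is whatever follows the last colon;
        # the name may be empty (owning group) or contain '/Role=...'.
        name, perms = rest.rsplit(":", 1)
        entries[(is_default, entry_type, name)] = perms
    return entries


def groups_missing_rwx(
    acl_text: str, groups: Iterable[str], default: bool = False
) -> List[str]:
    """Return the groups from `groups` that do not have 'rwx' in the ACL."""
    acl = parse_acl(acl_text)
    return [g for g in groups if acl.get((default, "g", g)) != "rwx"]


if __name__ == "__main__":
    # g: column of the node12.datagrid.cea.fr row.
    acl = "g::rwx g:atlas:rwx g:atlas/Role=production:rwx"
    # Illustrative group list only.
    wanted = ["atlas", "atlas/Role=production", "atlas/Role=lcgadmin"]
    print(groups_missing_rwx(acl, wanted))
```

Passing `default=True` together with the text of the `d:g:` column runs the same check on the default entries.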

ATLASLOCALGROUPDISK deployment

| SE | owner | group | g: | g:atlas | g:atlas/<locality> | g:atlas/Role=production | g:atlas/Role=lcgadmin | d:g: | d:g:atlas | d:g:atlas/<locality> | d:g:atlas/Role=production | d:g:atlas/Role=lcgadmin | subdirectories |

| clrlcgse01.in2p3.fr | root | root | rwx | none | rwx | rwx | rwx | rwx | none | rwx | rwx | rwx | none |

| grid05.lal.in2p3.fr | Stephane Jezequel | atlas/fr | rwx | r-x | none | rwx | rwx | rwx | r-x | rwx | rwx | rwx | no subdirectory, 1 file (test1 by Stephane) |

| node12.datagrid.cea.fr | root | root | rwx | none | rwx | rwx | rwx | rwx | none | rwx | rwx | rwx | jerome:NG |

| lpnse1.in2p3.fr | root | atlas/Role=production | rwx | none | rwx | rwx | rwx | rwx | none | rwx | rwx | rwx | none |

| lapp-se01.in2p3.fr | root | atlas/Role=production | r-x | none | rwx | rwx | rwx | rwx | none | rwx | rwx | rwx | SAM:ok |

| marsedpm.in2p3.fr | | | | | | | | | | | | | |

| lpsc-se-dpm-server.in2p3.fr | | | | | | | | | | | | | |

| lcg-se01.icepp.jp | root | atlas/jp | rwx | none | rwx | rwx | rwx | r-x | none | rwx | rwx | rwx | SAM:ok, data08_cos:?, fdr08_run2:? |

| tbat05.nipne.ro | | | | | | | | | | | | | |

| tbit00.nipne.ro |